The problem: Limits of traditional search

Classic full-text search based on algorithms like BM25 has several fundamental constraints:

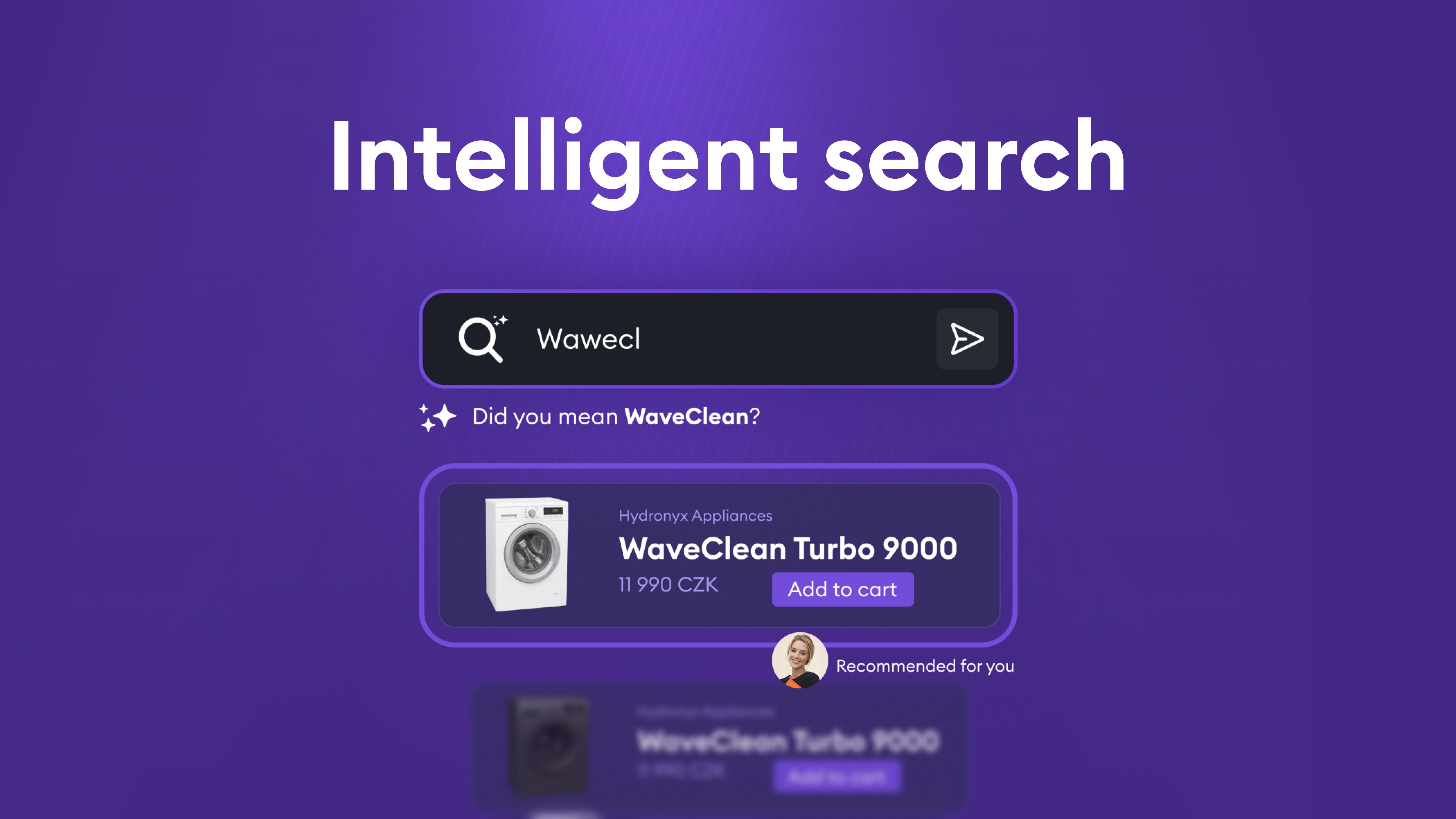

1. Typos and variants

- Users frequently submit queries with typos or alternate spellings.

- Traditional search expects exact or near-exact text matches.

2. Title-only searching

- Full-text search often targets specific fields (e.g., product or entity name).

- If relevant information lives in a description or related entities, the system may miss it.

3. Missing semantic understanding

- The system doesn’t understand synonyms or related concepts.

- A query for “car” won’t find “automobile” or “vehicle,” even though they describe the same or a closely related concept.

- Cross-lingual search is nearly impossible—a Czech query won’t retrieve English results.

4. Contextual search

- Users often search by context, not exact names.

- For example, “products by manufacturer X” should return all relevant products, even if the manufacturer name isn’t explicitly in the product name.

The solution: Hybrid search with embeddings

The remedy is to combine two approaches: traditional full-text search (BM25) and vector embeddings for semantic search.

Vector embeddings for semantic understanding

Vector embeddings map text into a multi-dimensional space where semantically similar meanings sit close together. This enables:

- Meaning-based retrieval: A query like “notebook” can match “laptop,” “portable computer,” or related concepts.

- Cross-lingual search: A Czech query can find English results if they share meaning.

- Contextual search: The system captures relationships between entities and concepts.

- Whole-content search: Embeddings can represent the entire document, not just the title.
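To make "close together" concrete, here is a minimal sketch of cosine similarity, a common closeness measure for embeddings. The three-dimensional vectors are invented for illustration only; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors, hand-picked so that related words point the same way.
toy_embeddings = {
    "notebook": [0.9, 0.1, 0.0],
    "laptop": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.9],
}


def rank_by_similarity(query, embeddings):
    """Return (term, similarity) pairs for all other terms, most similar first."""
    query_vec = embeddings[query]
    others = [
        (term, cosine_similarity(query_vec, vec))
        for term, vec in embeddings.items()
        if term != query
    ]
    return sorted(others, key=lambda pair: pair[1], reverse=True)


ranking = rank_by_similarity("notebook", toy_embeddings)
```

With these toy values, "laptop" ranks far above "banana" for the query "notebook", although the two words share almost no characters.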

Why embeddings alone are not enough

Embeddings are powerful, but not sufficient on their own:

- Typos: Small character changes can produce very different embeddings.

- Exact matches: Sometimes we need precise string matching, where full-text excels.

- Performance: Vector search can be slower than optimized full-text indexes.

A hybrid approach: BM25 + HNSW

The ideal solution blends both:

- BM25 (Best Matching 25): A classic full-text ranking function that excels at exact term matches (typo tolerance has to come from fuzzy matching layered on top).

- HNSW (Hierarchical Navigable Small World): An efficient nearest-neighbor algorithm for fast vector search.

Combining them yields the best of both worlds: the precision of full-text for exact matches and the semantic understanding of embeddings for contextual queries.
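One common way to merge a BM25 ranking with a vector ranking is Reciprocal Rank Fusion (RRF), which is also what Azure AI Search uses for hybrid queries. The sketch below is a plain-Python illustration, not the service's implementation; the document IDs are made up, and `k=60` is the constant conventionally used with RRF.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked well by both retrievers rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical result lists from the two retrievers.
bm25_ranking = ["doc_a", "doc_b", "doc_c"]
vector_ranking = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([bm25_ranking, vector_ranking])
```

RRF only looks at ranks, not raw scores, which sidesteps the fact that BM25 scores and vector similarities live on different scales.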

The challenge: Getting the ranking right

Finding relevant candidates is only step one. Equally important is ranking them well. Users typically click the first few results; poor ordering undermines usefulness.

Why simple “Sort by” is not enough

Sorting by a single criterion (e.g., date) fails because multiple factors matter simultaneously:

- Relevance: How well the result matches the query (from both full-text and vector signals).

- Business value: Items with higher margin may deserve a boost.

- Freshness: Newer items are often more relevant.

- Popularity: Frequently chosen items may be more interesting to users.

Scoring functions: Combining multiple signals

Instead of a simple sort, you need a composite scoring system that blends:

- Full-text score: How well BM25 matches the query.

- Vector similarity: Semantic closeness from embeddings (a smaller vector distance means a higher score).

- Scoring functions, such as:

  - Magnitude functions for margin/popularity (higher value → higher score).

  - Freshness functions for time (newer → higher score).

  - Other business metrics as needed.

The final score is a weighted combination of these signals. The hard part is that the right weights are not obvious—you must find them experimentally.
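A weighted combination of this kind can be sketched as follows. This is an illustration under stated assumptions, not how any particular engine computes its score: the text and vector scores are assumed to be normalized to the 0..1 range already, and the function names, the margin range (0 to 50), and the 30-day freshness window are all hypothetical.

```python
from datetime import datetime, timezone


def magnitude_boost(value, low, high):
    """Linear 0..1 boost: 0 at or below `low`, 1 at or above `high`."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(max((value - low) / (high - low), 0.0), 1.0)


def freshness_boost(updated_at, now, window_days):
    """Linear 0..1 boost: 1 for a brand-new item, 0 once older than the window."""
    age_days = (now - updated_at).total_seconds() / 86400
    return min(max(1.0 - age_days / window_days, 0.0), 1.0)


def composite_score(text_score, vector_similarity, margin, updated_at, now, weights):
    """Weighted sum of relevance signals and business signals."""
    return (
        weights["text"] * text_score
        + weights["vector"] * vector_similarity
        + weights["margin"] * magnitude_boost(margin, 0.0, 50.0)
        + weights["freshness"] * freshness_boost(updated_at, now, 30)
    )


# Hypothetical weights; finding good values is the tuning problem itself.
weights = {"text": 0.4, "vector": 0.4, "margin": 0.1, "freshness": 0.1}
now = datetime(2024, 1, 31, tzinfo=timezone.utc)
updated = datetime(2024, 1, 16, tzinfo=timezone.utc)

score = composite_score(0.8, 0.6, 25.0, updated, now, weights)
```

Keeping every signal in the same 0..1 range makes the weights directly comparable, which matters once you start tuning them.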

Hyperparameter search: Finding optimal weights

Tuning weights for full-text, vector embeddings, and scoring functions is critical to result quality. We use hyperparameter search to do this systematically.

Building a test dataset

A good test set is the foundation of successful hyperparameter search. We assemble a corpus of queries where we know the ideal outcomes:

- Reference results: For each test query, a list of expected results in the right order.

- Annotations: Each result labeled relevant/non-relevant, optionally with priority.

- Representative coverage: Include diverse query types (exact matches, synonyms, typos, contextual queries).
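One possible shape for such a test set is shown below. The class name, field names, query types, and document IDs are all hypothetical; the point is only that each query carries graded relevance labels from which the expected order can be derived.

```python
from dataclasses import dataclass, field


@dataclass
class JudgedQuery:
    query: str
    query_type: str  # e.g. "exact", "synonym", "typo", "contextual"
    # Document ID -> graded label: 0 = not relevant, higher = more important.
    relevance: dict = field(default_factory=dict)

    def ideal_ranking(self):
        """Relevant document IDs, best first (the reference result list)."""
        relevant = [(doc, grade) for doc, grade in self.relevance.items() if grade > 0]
        return [doc for doc, _ in sorted(relevant, key=lambda p: p[1], reverse=True)]


test_set = [
    JudgedQuery("notebook", "synonym",
                {"sku-laptop-15": 3, "sku-paper-notebook": 1, "sku-banana": 0}),
    JudgedQuery("thinkpad x1", "exact", {"sku-thinkpad-x1": 3, "sku-laptop-15": 1}),
    JudgedQuery("thinkpd x1", "typo", {"sku-thinkpad-x1": 3}),
]

# Quick coverage check: which query types does the test set exercise?
covered_types = {case.query_type for case in test_set}
```

A coverage check like `covered_types` is a cheap way to notice that, say, contextual queries are still missing from the set.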

Metrics for quality evaluation

To objectively judge quality, we compare actual results to references using standard metrics:

1. Recall (completeness)

- Do results include everything they should?

- Are all relevant items present?

2. Ranking quality (ordering)

- Are results in the correct order?

- Are the most relevant results at the top?

Common metrics include NDCG (Normalized Discounted Cumulative Gain), which rewards placing the most relevant items near the top and, because it is normalized against the ideal ordering, also penalizes relevant items that are missing. Other useful metrics are Precision@K (the share of the top K results that are relevant) and MRR (Mean Reciprocal Rank), which measures the position of the first relevant result.
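The three metrics can be sketched in a few lines. This version uses the linear-gain form of DCG (grade divided by log2 of position + 1); other variants exist, and the document IDs and grades are made up.

```python
import math


def precision_at_k(ranked_ids, relevant_ids, k):
    """Share of the top k results that are relevant."""
    return sum(1 for doc in ranked_ids[:k] if doc in relevant_ids) / k


def reciprocal_rank(ranked_ids, relevant_ids):
    """1 / position of the first relevant result, or 0 if there is none.

    MRR is the mean of this value over all test queries.
    """
    for position, doc in enumerate(ranked_ids, start=1):
        if doc in relevant_ids:
            return 1.0 / position
    return 0.0


def dcg(grades):
    """Discounted cumulative gain: lower positions count for less."""
    return sum(g / math.log2(pos + 1) for pos, g in enumerate(grades, start=1))


def ndcg_at_k(ranked_ids, relevance, k):
    """DCG of the actual ranking divided by DCG of the ideal ranking."""
    actual = [relevance.get(doc, 0) for doc in ranked_ids[:k]]
    ideal = sorted(relevance.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(actual) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical judged query: graded labels and what the system returned.
relevance = {"a": 3, "b": 2, "c": 1}
returned = ["b", "x", "a", "c"]

p3 = precision_at_k(returned, set(relevance), 3)
rr = reciprocal_rank(returned, set(relevance))
ndcg3 = ndcg_at_k(returned, relevance, 3)
```

Here the first result is relevant, so the reciprocal rank is perfect, while NDCG@3 is below 1 because the best item only appears in third place.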

Iterative optimization

Hyperparameter search proceeds iteratively:

- Set initial weights: Start with sensible defaults.

- Test combinations: Systematically vary:

  - Field weights for full-text (e.g., product title vs. description).

  - Weights for vector fields (embeddings from different document parts).

  - Boosts for scoring functions (margin, recency, popularity).

  - Aggregation functions (how to combine scoring functions).

- Evaluate: Run the test dataset for each combination and compute metrics.

- Select the best: Choose the parameter set with the strongest metrics.

- Refine: Narrow around the best region and repeat as needed.

This can be time-consuming, but it’s essential for optimal results. Automation lets you test hundreds or thousands of combinations to find the best.
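The loop can be sketched as a plain grid search. Everything here is a toy stand-in: the candidate scores are invented, only two weights are tuned, and the metric is mean reciprocal rank of a single expected top result per query. A real run would call the search service for each combination instead of the hypothetical `rank` function.

```python
from itertools import product

# Hypothetical per-query candidates: doc_id -> (text_score, vector_score), both 0..1.
CANDIDATES = {
    "notebook": {
        "laptop-15": (0.2, 0.9),
        "paper-notebook": (0.9, 0.5),
        "banana": (0.0, 0.1),
    },
    "thinkpad x1": {
        "thinkpad-x1": (0.9, 0.6),
        "laptop-15": (0.1, 0.7),
        "banana": (0.0, 0.0),
    },
}
# Reference answers: the result that should come first for each query.
EXPECTED_TOP = {"notebook": "laptop-15", "thinkpad x1": "thinkpad-x1"}


def rank(query, text_weight, vector_weight):
    """Order the candidates of one query by their weighted score."""
    docs = CANDIDATES[query]
    return sorted(
        docs,
        key=lambda d: text_weight * docs[d][0] + vector_weight * docs[d][1],
        reverse=True,
    )


def mean_reciprocal_rank(text_weight, vector_weight):
    """Evaluate one weight combination against the whole test set."""
    total = 0.0
    for query, expected in EXPECTED_TOP.items():
        position = rank(query, text_weight, vector_weight).index(expected) + 1
        total += 1.0 / position
    return total / len(EXPECTED_TOP)


def grid_search(text_weights, vector_weights):
    """Try every combination and return (best_weights, best_score)."""
    results = [
        ((tw, vw), mean_reciprocal_rank(tw, vw))
        for tw, vw in product(text_weights, vector_weights)
    ]
    return max(results, key=lambda item: item[1])


best_weights, best_score = grid_search([0.25, 0.5, 1.0], [0.25, 0.5, 1.0])
```

The refine step would repeat the same search on a finer grid around `best_weights`. With more parameters, exhaustive grids grow quickly, so random or adaptive sampling is a common substitute.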

Monitoring and continuous improvement

Even after tuning, ongoing monitoring and iteration are crucial.

Tracking user behavior

A key signal is whether users click the results they’re shown. If they skip the first result and click the third or fourth, your ranking likely needs work.

Track:

- CTR (Click-through rate): How often users click.

- Click position: Which rank gets the click (ideally the top results).

- No-click queries: Queries with zero clicks may indicate poor results.
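These three signals can be computed from a simple search log. The log format below is an assumption (one row per search, with the clicked rank or `None`), and CTR is taken here to mean the share of searches that led to a click.

```python
from collections import defaultdict

# Hypothetical log rows: (query, clicked_position); None means no click.
search_log = [
    ("notebook", 1),
    ("notebook", 3),
    ("thinkpad x1", 1),
    ("gaming chair", None),
    ("gaming chair", None),
]


def click_metrics(log):
    """Compute CTR, mean click position, and queries that never got a click."""
    clicks = [pos for _, pos in log if pos is not None]
    clicked_by_query = defaultdict(bool)
    for query, pos in log:
        # A query counts as clicked if any of its searches produced a click.
        clicked_by_query[query] |= pos is not None
    return {
        "ctr": len(clicks) / len(log),
        "mean_click_position": sum(clicks) / len(clicks) if clicks else None,
        "no_click_queries": sorted(
            q for q, clicked in clicked_by_query.items() if not clicked
        ),
    }


metrics = click_metrics(search_log)
```

A mean click position that drifts away from 1 over time is an early hint that ranking quality is slipping.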

Analyzing problem cases

When you find queries where users avoid the top results:

- Log these cases: Save the query, returned results, and the clicked position.

- Diagnose: Why did the system rank poorly? Missing relevant items? Wrong ordering?

- Augment the test set: Add these cases to your evaluation corpus.

- Adjust weights/rules: Update weights or introduce new heuristics as needed.

This iterative loop ensures the system keeps improving and adapts to real user behavior.
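A minimal sketch of the first and third steps follows, assuming a hypothetical log entry format. Treating the clicked item as the correct top result is a simplification; in practice someone should review each case before it enters the test set.

```python
def find_problem_cases(log, max_good_position=2):
    """Return log entries where the user clicked below the top positions."""
    return [
        entry
        for entry in log
        if entry["clicked_position"] is not None
        and entry["clicked_position"] > max_good_position
    ]


def to_test_case(entry):
    """Turn a logged problem case into a candidate regression test.

    Assumption: the clicked item is what should have ranked first.
    """
    clicked_id = entry["results"][entry["clicked_position"] - 1]
    return {"query": entry["query"], "expected_top": clicked_id}


# Hypothetical logged searches: query, returned IDs in order, clicked rank.
logged = [
    {"query": "notebook",
     "results": ["paper-notebook", "banana", "laptop-15"],
     "clicked_position": 3},
    {"query": "thinkpad x1",
     "results": ["thinkpad-x1", "laptop-15"],
     "clicked_position": 1},
    {"query": "gaming chair", "results": ["banana"], "clicked_position": None},
]

problems = find_problem_cases(logged)
new_test_cases = [to_test_case(entry) for entry in problems]
```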

Implementing on Azure: AI Search and OpenAI embeddings

All of the above can be implemented effectively with Microsoft Azure.

Azure AI Search

Azure AI Search (formerly Azure Cognitive Search) provides:

- Hybrid search: Native support for combining full-text (BM25) and vector search.

- HNSW indexes: An efficient HNSW implementation for vector retrieval.

- Scoring profiles: A flexible framework for custom scoring functions.

- Text weights: Per-field weighting for full-text.

- Vector weights: Per-field weighting for vector embeddings.

Scoring profiles can combine:

- Magnitude scoring for numeric values (margin, popularity).

- Freshness scoring for temporal values (created/updated dates).

- Text weights for full-text fields.

- Vector weights for embedding fields.

- Aggregation functions to blend multiple scoring signals.
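As an illustration, a scoring profile in an index definition might look like the fragment below, written as a Python dictionary that serializes to the JSON the REST API expects. The profile name, field names (`title`, `description`, `margin`, `updatedAt`), and all numeric values are hypothetical, and the property names should be checked against the API version you target.

```python
import json

# Hypothetical scoring profile: text weights plus two boosting functions.
scoring_profile = {
    "name": "relevance-and-business",
    # Matches in the title count three times as much as in the description.
    "text": {"weights": {"title": 3.0, "description": 1.0}},
    "functions": [
        {
            # Higher margin gives a higher boost, linear between 0 and 50.
            "type": "magnitude",
            "fieldName": "margin",
            "boost": 2.0,
            "interpolation": "linear",
            "magnitude": {
                "boostingRangeStart": 0,
                "boostingRangeEnd": 50,
                "constantBoostBeyondRange": True,
            },
        },
        {
            # Items updated within the last 30 days (ISO 8601 duration) get a boost.
            "type": "freshness",
            "fieldName": "updatedAt",
            "boost": 1.5,
            "interpolation": "linear",
            "freshness": {"boostingDuration": "P30D"},
        },
    ],
    # How the function boosts are combined with each other.
    "functionAggregation": "sum",
}

index_fragment = {"scoringProfiles": [scoring_profile]}
print(json.dumps(index_fragment, indent=2))
```

The weights, boosts, and aggregation mode in such a profile are exactly the hyperparameters that the tuning process searches over.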

OpenAI embeddings

For embeddings, we use OpenAI models such as text-embedding-3-large:

- High-quality embeddings: Strong multilingual performance, including Czech.

- Consistent API: Straightforward integration with Azure AI Search.

- Scalability: Handles high request volumes.

Multilingual capability makes these embeddings particularly suitable for Czech and other smaller languages.

Integration

Azure AI Search can call OpenAI embedding models directly (integrated vectorization), which simplifies integration. An embedding skill generates vectors while documents are indexed, and a vectorizer attached to the vector field embeds the query text at search time.
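A rough sketch of the relevant index fragment and a hybrid query body is shown below, again as Python dictionaries that serialize to JSON. The shapes follow the Azure AI Search REST API as we understand it and may differ between API versions; the field, profile, and deployment names are hypothetical, and the resource URI is a placeholder. The dimension count of 3072 matches the default output size of text-embedding-3-large.

```python
import json

# Hypothetical vector field; 3072 is the default size of text-embedding-3-large.
vector_field = {
    "name": "contentVector",
    "type": "Collection(Edm.Single)",
    "searchable": True,
    "dimensions": 3072,
    "vectorSearchProfile": "hnsw-openai",
}

# Assumed shape of the vector search configuration (verify against your API version).
vector_search = {
    "algorithms": [
        {"name": "hnsw-default", "kind": "hnsw", "hnswParameters": {"metric": "cosine"}}
    ],
    "profiles": [
        {"name": "hnsw-openai", "algorithm": "hnsw-default", "vectorizer": "openai-embedder"}
    ],
    "vectorizers": [
        {
            "name": "openai-embedder",
            "kind": "azureOpenAI",
            "azureOpenAIParameters": {
                "resourceUri": "https://<your-resource>.openai.azure.com",  # placeholder
                "deploymentId": "<your-embedding-deployment>",  # placeholder
                "modelName": "text-embedding-3-large",
            },
        }
    ],
}

# Hybrid query: full-text search plus a text-to-vector query in one request.
hybrid_query = {
    "search": "notebook",
    "vectorQueries": [
        {"kind": "text", "text": "notebook", "fields": "contentVector", "k": 50}
    ],
    "top": 10,
}

print(json.dumps({"vectorSearch": vector_search, "query": hybrid_query}, indent=2))
```

Because the vectorizer is attached to the profile used by the field, the query can pass plain text and let the service produce the query embedding.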
