BigHub Blog - Read, Discover, Get inspired.

The latest industry news, interviews, technologies, and resources.

Why MCP might be the HTTP of the AI-first era

What Is MCP – and Why Should You Care?

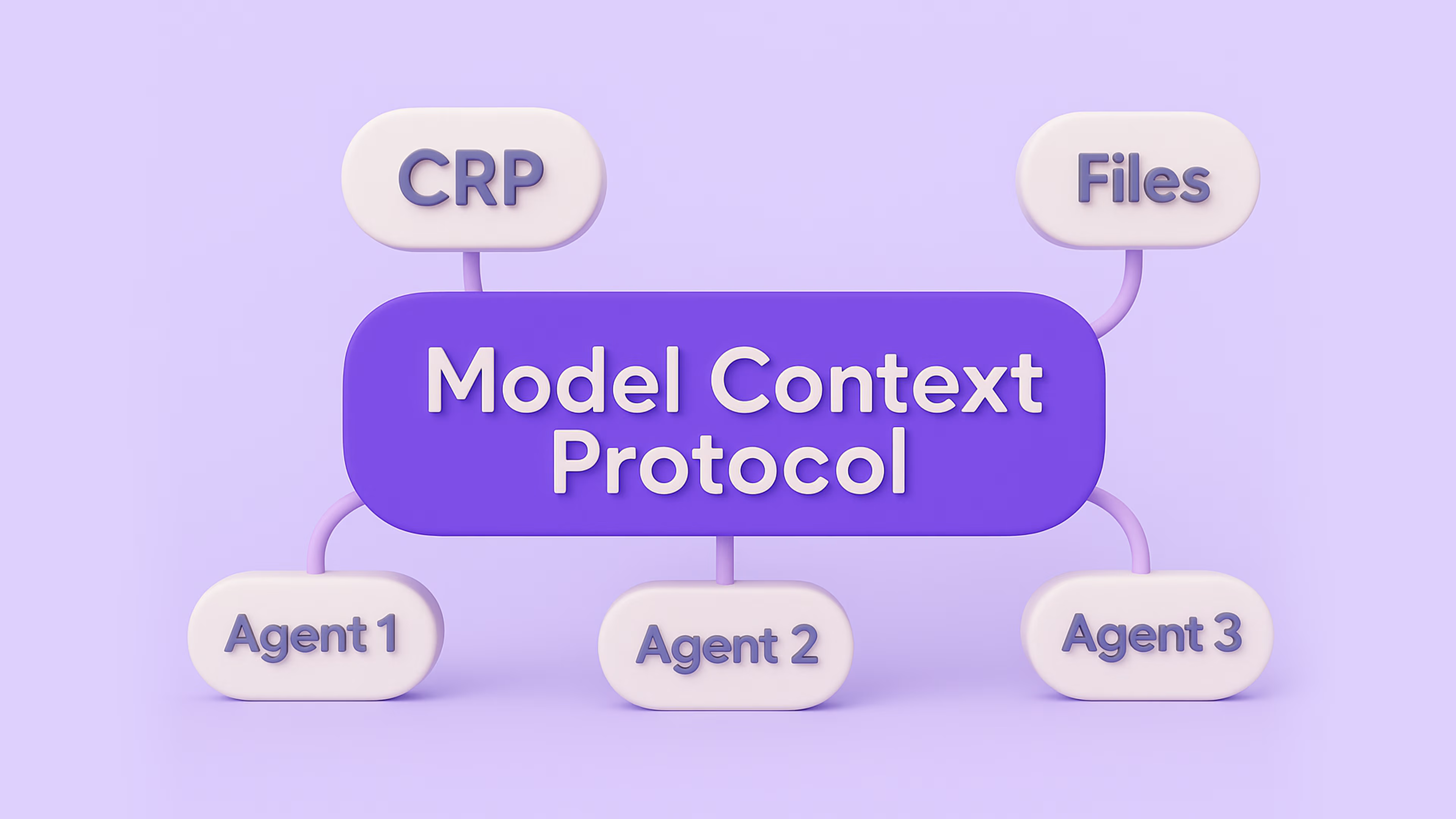

Model Context Protocol may sound like something out of an academic paper or internal Big Tech documentation. But in reality, it’s a standard that enables different AI systems to seamlessly communicate—not just with each other, but also with APIs, business tools, and humans.

Today’s AI tools—whether chatbots, voice assistants, or automation bots—are typically limited to narrow tasks and single systems. MCP changes that. It allows intelligent systems to:

- Check your e-commerce order status

- Review your insurance contract

- Reschedule your doctor’s appointment

- Arrange delivery and payment

All without switching apps or platforms. And more importantly: without every company needing to build its own AI assistant. All it takes is making services and processes “MCP-accessible.”
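To make "MCP-accessible" a little more concrete, here is a minimal sketch of what exposing a single service could look like. MCP messages are JSON-RPC 2.0, and assistants discover and invoke capabilities through the `tools/list` and `tools/call` methods. Everything else below (the `get_order_status` tool, the `ORDERS` table, the `handle` function) is hypothetical, and a real server would normally use an official MCP SDK and a proper transport rather than this in-process dispatcher.

```python
import json

# Hypothetical order data standing in for a real e-commerce backend.
ORDERS = {"A-1001": "shipped", "A-1002": "processing"}

# Tool descriptions returned to the assistant so it knows what it can call.
TOOLS = [
    {
        "name": "get_order_status",
        "description": "Return the current status of an order.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]


def handle(request: dict) -> dict:
    """Answer one JSON-RPC 2.0 request with a response carrying the same id."""
    method = request.get("method")
    params = request.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call" and params.get("name") == "get_order_status":
        order_id = params.get("arguments", {}).get("order_id")
        status = ORDERS.get(order_id, "unknown order")
        result = {"content": [{"type": "text", "text": status}]}
    else:
        # -32601 is the JSON-RPC 2.0 code for "Method not found".
        return {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}


call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_order_status", "arguments": {"order_id": "A-1001"}},
}
print(json.dumps(handle(call)))
```

The point of the sketch is the division of labour: the company describes and serves the capability, while the customer's assistant decides when to call it.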

From AI as a Tool to AI as an Interface

Until now, AI in business has mostly served as a support tool for employees—helping with search, data analysis, or faster decision-making. But MCP unlocks a new paradigm:

Instead of building AI tools for internal use, companies will expose their services to be used by external AI systems—especially those owned by customers themselves.

That means the customer is no longer forced to use the company’s interface. They can interact with your services through their own AI assistant, tailored to their preferences and context. It’s a fundamental shift. Just as the web changed how we accessed information, and mobile apps changed how we shop or travel, MCP and intelligent interfaces will redefine how people interact with companies.

The AI-First Era Is Already Here

It wasn’t long ago that people began every query with Google. Today, more and more users turn first to ChatGPT, Perplexity, or their own digital assistant. That shift is real: AI is becoming the entry point to the digital world.

“Web-first” and “mobile-first” are no longer enough. We’re entering an AI-first era—where intelligent interfaces will be the first layer that handles requests, questions, and decisions. Companies must be ready for that.

What This Means for Companies

1. No More Need to Build Your Own Chatbot

Companies spend significant resources building custom chatbots, voice systems, and interfaces. These tools are expensive to maintain and hard to scale.

With MCP, the user shows up with their own AI system and expects only one thing: structured access to your services and information. No need to worry about UX, training models, or customer flows—just expose what you do best.

2. Traditional Call Centers Become Obsolete

Instead of calling your support line, a customer can query their AI assistant, which connects directly to your systems, gathers answers, or executes tasks.

No queues. No wait times. No pressure on your staffing model. Operations move into a seamless, automated ecosystem.

3. New Business Models and Brand Trust

Because users will bring their own trusted digital interface, companies no longer carry the burden of poor chatbot experiences. And thanks to MCP’s built-in structure for access control and transparency, businesses can decide who sees what, when, and how—while building trust and reducing risks.

What This Means for Everyday Users

- One interface for everything: no more juggling dozens of logins, websites, or apps. One assistant does it all.

- True autonomy: your digital assistant can order products, compare options, request refunds, or manage appointments, with no manual effort required.

- Smarter, faster decisions: the system knows your preferences, history, and goals, and makes intelligent recommendations tailored to you.

Practical example:

You ask your AI to generate a recipe, check your pantry, compare prices across online grocers, pick the cheapest options, and schedule delivery—all in one go, no clicking required.

The Underrated Challenge: Data

For this to work, users will need to give their AI systems access to personal data. And companies will need to open up parts of their systems to the outside world. That’s where trust, governance, and security become mission-critical. MCP provides a standardized framework for managing access, ensuring safety, and scaling cooperation between systems—without replicating sensitive data or creating silos.
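One way to picture "who sees what" is a scope check that runs before any data leaves the company's systems. The sketch below is purely illustrative: the scope names, the `GRANTS` table, and the `authorize` helper are assumptions made for this example, not identifiers taken from MCP.

```python
# Hypothetical mapping from an assistant's access token to the scopes it holds.
GRANTS = {
    "token-alice": {"orders:read", "appointments:write"},
    "token-bob": {"orders:read"},
}

# Scope each exposed tool requires (assumed names, for illustration only).
REQUIRED_SCOPE = {
    "get_order_status": "orders:read",
    "reschedule_appointment": "appointments:write",
}


def authorize(token: str, tool: str) -> bool:
    """Return True only if the token was granted the scope the tool needs."""
    required = REQUIRED_SCOPE.get(tool)
    if required is None:
        return False  # unknown tools are denied by default
    return required in GRANTS.get(token, set())
```

Denying by default keeps the exposed surface explicit: a tool is reachable only when someone has deliberately listed it and granted its scope.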

Why clean data matters (and what it actually means to “have data in order”)

🧠 What does it mean to have your data in order?

It’s more than storing files in the cloud or keeping spreadsheets neat.

When your data is “in order,” it means that:

- It’s accessible – people across the company can access it easily and securely

- It’s high-quality – data is clean, up to date, and consistent

- It has context – you know where the data came from, how it was created, and what it represents

- It’s connected – systems talk to each other, there are no data silos

- It’s actionable – the data supports decision-making, automation, and business goals

In short: Clean data = trustworthy and usable data.

How can you tell if your data isn’t in order?

Here are some common red flags:

These challenges are common—startups, scale-ups, and enterprises all face them at some point.

What are the risks of messy or low-quality data?

Slower decisions

Without confidence in your data, decisions are delayed—or based on gut feeling instead of facts.

Wasted resources

Analysts spend most of their time cleaning and merging data, rather than generating value.

Poor customer experiences

Outdated or fragmented data means poor personalization, errors in communication, or missed opportunities.

Blocked AI and automation efforts

You can’t build predictive models or automation without structured, clean data.

What does it take to “clean up your data”?

Data audit

Map out your data sources, flows, and responsibilities.

Data integration

Connect systems like CRM, ERP, e‑shop, marketing platforms into a unified view.

Implement a modern data platform

Build a central, scalable place to store and manage data (e.g., a data warehouse with BI tools).

Ensure data quality

Remove duplicates, validate formats, ensure consistency.

Define governance

Set clear responsibilities for data ownership, access, and documentation.
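The "ensure data quality" step above (remove duplicates, validate formats, ensure consistency) can be sketched in a few lines. This is a simplified illustration on hypothetical customer records; the `clean_customers` function and the deliberately loose email pattern are assumptions, and a production pipeline would apply far richer rules.

```python
import re

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def clean_customers(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split rows into (clean, rejected): normalize, validate, then deduplicate."""
    clean, rejected, seen = [], [], set()
    for row in rows:
        email = row.get("email", "").strip().lower()  # consistent formatting
        if not EMAIL_RE.match(email):
            rejected.append(row)  # invalid format: keep aside for manual review
            continue
        if email in seen:
            continue  # duplicate of a record we already kept
        seen.add(email)
        clean.append({**row, "email": email})
    return clean, rejected


rows = [
    {"name": "Jana", "email": "Jana@Example.com "},
    {"name": "Jana N.", "email": "jana@example.com"},
    {"name": "Petr", "email": "petr(at)example.com"},
]
clean, rejected = clean_customers(rows)
print(len(clean), "clean,", len(rejected), "rejected")
```

Rejected rows are returned rather than dropped, so someone with ownership of the data can decide how to repair them.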

What’s the business impact?

✅ A single source of truth

✅ Smarter, faster decision-making

✅ Improved collaboration between departments

✅ Stronger foundations for AI, automation, and personalization

✅ More trust in your reporting and forecasts

Final thoughts: Data isn’t just a cost. It’s an asset.

Many companies treat data as a back-office IT issue. But in reality, data is one of your most valuable business assets—and without having it in order, you can’t grow, digitize, or deliver personalized experiences.

The Evolution of AI Agents frameworks: From Autogen to LangGraph

In the past, several libraries such as Autogen and Langchain Agent Executor were used to create AI agents and the workflow of their tasks. These tools aimed to simplify and automate processes by enabling multiple agents to work together in performing more complex tasks. But for the past several months, we have been working with LangGraph and fell in love with it for the significant improvements it offers to AI developers.

Autogen was one of the first frameworks and provided a much-needed higher level of abstraction, making it easier to set up AI agents. However, the interaction between agents often felt somewhat like "magic", too opaque for developers who needed more granular control over how the processes were defined and executed. This lack of transparency could lead to challenges in debugging and fine-tuning.

Then came Langchain Agent Executor, which allowed developers to pass "tools" to agents, and the system would keep calling these tools until it produced a final answer. It even allowed agents to call other agents, and the decision on which agent to use next was managed by AI.

However, the Langchain Agent Executor approach had its drawbacks. For instance:

- It was difficult to track the individual steps of each agent. If one agent was responsible for searching Google and retrieving results, it wasn’t easy to display those results to the user in real-time.

- It also posed challenges in transferring information between agents. Imagine one agent uses Google to find information and another is tasked with finding related images. You might want the second agent to use a summary of the article as input for image searches, but this kind of information handoff wasn’t straightforward.

State of the art AI Agents framework? LangGraph!

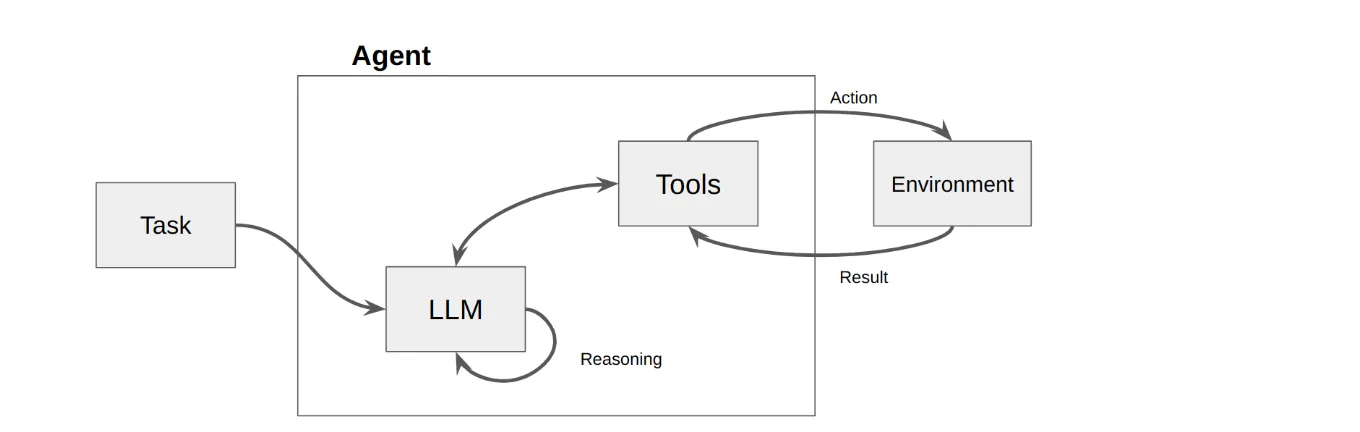

LangGraph addresses many of these limitations by providing a more modular and flexible framework for managing agents. Here’s how it differs from its predecessors:

Flexible Global State Management

LangGraph allows developers to define a global state. This means that agents can either access the entire state or just a portion of it, depending on their task. This flexibility is critical when coordinating multiple agents, as it allows for better communication and resource sharing. For instance, the agent responsible for finding images could be given a summary of the article, which it could use to refine its keyword searches.
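The idea of a shared state that each agent reads only in part can be illustrated without any framework. The sketch below is plain Python, not the LangGraph API; `ArticleState`, `research_agent`, and `image_agent` are hypothetical names, and the agents are stubs standing in for real search and LLM calls.

```python
from typing import TypedDict


class ArticleState(TypedDict, total=False):
    """Shared state; each agent reads only the keys it needs."""
    topic: str
    article: str
    summary: str
    image_query: str


def research_agent(state: ArticleState) -> ArticleState:
    # Stand-in for a web search plus an LLM call that writes and summarizes.
    article = f"A long article about {state['topic']} ..."
    return {"article": article, "summary": f"Key facts on {state['topic']}"}


def image_agent(state: ArticleState) -> ArticleState:
    # Reads only the summary, never the full article.
    return {"image_query": f"photo illustrating: {state['summary']}"}


state: ArticleState = {"topic": "solar energy"}
for agent in (research_agent, image_agent):
    state = {**state, **agent(state)}  # merge each partial update into the state

print(state["image_query"])
```

Each agent returns only the keys it changed, which is what makes the handoff of the summary to the image agent straightforward.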

Modular Design with Graph Structure

At the core of LangGraph is a graph-based structure, where nodes represent either calls to a language model (LLM) or the use of other tools. Each node functions as a step in the process, taking the current state as input and outputting an updated state.

The edges in the graph define the flow of information between nodes. These edges can be:

- Optional: allowing the process to branch into different states based on logic or the decisions of the LLM.

- Required: ensuring that after a Google search, for example, the next step will always be for a copywriting agent to process the search results.
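The difference between the two edge types can be shown with a tiny graph runner. This is a library-free sketch of the concept, not LangGraph's implementation: `run_graph`, the node functions, and `review_router` are hypothetical, and the nodes are stubs in place of real tool and LLM calls.

```python
from typing import Callable, Union

State = dict
Node = Callable[[State], State]
# A required edge is a fixed next-node name; an optional (conditional) edge is
# a router function that inspects the state and picks the next node.
Edge = Union[str, Callable[[State], str]]

END = "END"


def run_graph(nodes: dict[str, Node], edges: dict[str, Edge],
              start: str, state: State) -> State:
    current = start
    while current != END:
        state = {**state, **nodes[current](state)}  # node returns a state update
        edge = edges[current]
        current = edge if isinstance(edge, str) else edge(state)
    return state


def search(state: State) -> State:  # stand-in for a Google search tool
    return {"results": ["result A", "result B"]}


def copywriter(state: State) -> State:  # stand-in for an LLM call
    drafts = state.get("drafts", 0) + 1
    text = f"Draft {drafts} based on {len(state['results'])} results"
    return {"drafts": drafts, "text": text}


def review_router(state: State) -> str:  # stand-in for an LLM or rule-based decision
    return END if state["drafts"] >= 2 else "copywriter"


nodes = {"search": search, "copywriter": copywriter}
edges = {"search": "copywriter", "copywriter": review_router}
final = run_graph(nodes, edges, "search", {})
print(final["text"])
```

Here the search node always hands over to the copywriter (a required edge), while the copywriter either loops for another draft or finishes, depending on the router (an optional edge).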

Debugging and Visualization

LangGraph also enhances debugging and visualization. Developers can render the graph, making it easier for others to understand the workflow. Debugging is simplified through integration with tools like Langsmith, or open-source alternatives like Langfuse. These tools allow developers to monitor the execution in real time, displaying actions such as which articles were selected, what’s currently happening, and even statistics like token usage.

The Trade-Off: Flexibility vs. Complexity

While LangGraph offers substantial improvements in flexibility and control, it does come with a steeper learning curve. The ability to define global states, manage complex agent interactions, and create sophisticated logic chains gives developers low-level control but also requires a deeper understanding of the system.

LangGraph marks a significant evolution in the design and management of AI agents, offering a powerful, modular solution for complex workflows. For developers who need granular control and detailed oversight of agent operations, LangGraph presents a promising option. However, with great flexibility comes complexity, meaning developers must invest time in learning the framework to fully leverage its capabilities. That’s what we have done, making LangGraph our tool of choice for all complex GenAI solutions that need multiple agents working together.

BigHub is teaching LLMs to ReAct

Reason + Act = A Smarter AI

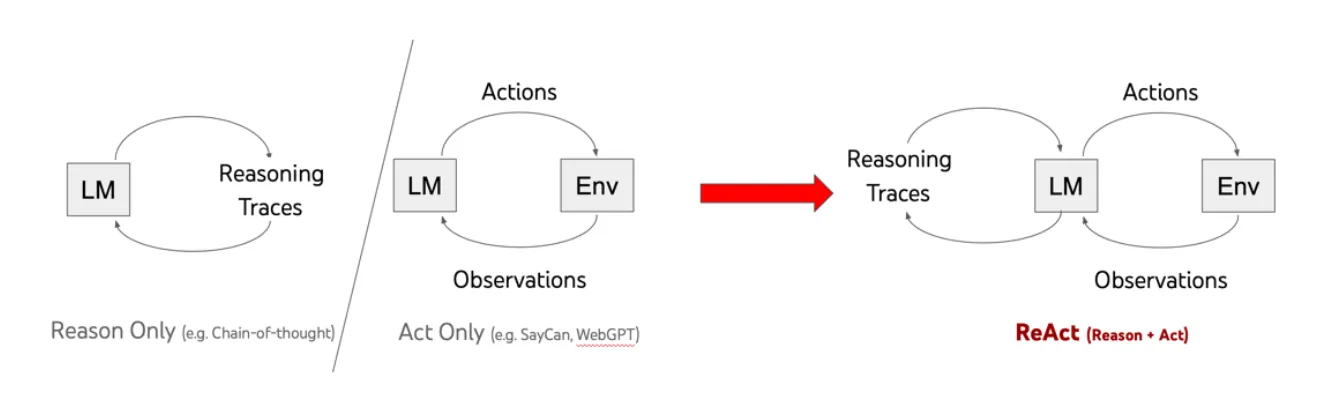

Originally proposed in a joint paper by Princeton University and Google, ReAct introduces a method that fuses two core capabilities of LLMs:

- Reasoning, often achieved through techniques like chain-of-thought prompting

- Acting, which involves generating and executing action plans (e.g., calling tools, retrieving data)

By combining these, LLMs are no longer just passive generators of text. They become agents capable of making decisions, interacting with environments, and refining their process as they work toward solving a task.

In practical terms, ReAct allows LLMs to structure and execute a sequence of operations — pulling in external data, making calculations, and iterating until a meaningful, actionable output is reached. It’s a game-changer in how we approach task automation.

LangChain ReAct: From Theory to Practice

At BigHub, we’re leveraging LangChain ReAct, one of the most accessible and powerful implementations of the ReAct paradigm. LangChain wraps the reasoning and acting logic into agent executors, enabling models to think step-by-step and interact with tools dynamically.

Let’s break it down with a fun — but technically accurate — example:

> Entering new AgentExecutor chain...

Query: "Find Leo DiCaprio's girlfriend and calculate her age raised to 0.43 power."

Action: Search

Action Input: "Leo DiCaprio girlfriend"

Observation: Camila Morrone

Thought: "Need to find Camila Morrone's age"

Action: Search

Action Input: "Camila Morrone's age"

Observation: 25 years

Thought: "Need to calculate 25 raised to the 0.43 power"

Action: Calculator

Action Input: 25^0.43

Observation: Answer: 3.991298452658078

Thought: "I now have the final answer"

Final Answer: Camila Morrone is Leo DiCaprio's girlfriend and her current age raised to the 0.43 power is 3.991298452658078.

> Finished chain.

This illustrates the model's ability to reason, act, and deliver a final answer — without human intervention between steps.
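The loop behind a trace like this is short. The following sketch replays it with a scripted stand-in for the LLM and stubbed tools, so it shows only the control flow of reason, act, observe. It does not use LangChain, and `scripted_model`, `react_loop`, and the `FACTS` table are hypothetical.

```python
# Stubbed tools. A real agent would call a search API and a safe math evaluator.
FACTS = {
    "Leo DiCaprio girlfriend": "Camila Morrone",
    "Camila Morrone's age": "25 years",
}


def search(query: str) -> str:
    return FACTS.get(query, "no result")


def calculator(expression: str) -> str:
    base, exponent = expression.split("^")
    return str(float(base) ** float(exponent))


TOOLS = {"Search": search, "Calculator": calculator}


def scripted_model(observations: list[str]) -> dict:
    """Stand-in for the LLM: picks the next step from what has been observed."""
    if len(observations) == 0:
        return {"action": "Search", "input": "Leo DiCaprio girlfriend"}
    if len(observations) == 1:
        return {"action": "Search", "input": f"{observations[0]}'s age"}
    if len(observations) == 2:
        age = observations[1].split()[0]
        return {"action": "Calculator", "input": f"{age}^0.43"}
    return {"final": observations[2]}


def react_loop(model, tools, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        step = model(observations)  # reason: decide what to do next
        if "final" in step:
            return step["final"]
        # act with the chosen tool, then record the observation
        observations.append(tools[step["action"]](step["input"]))
    raise RuntimeError("no final answer within max_steps")


answer = react_loop(scripted_model, TOOLS)
print(answer)
```

The `max_steps` guard matters in practice: with a real model in place of the script, nothing else guarantees that the loop terminates.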

Why It Matters for Business

While the example above is playful, the business implications are profound. Take the insurance industry:

A LangChain ReAct-powered assistant could autonomously:

- Retrieve calculation formulas from internal knowledge bases

- Prompt users for missing inputs

- Perform real-time computations

- Deliver final results instantly

No hand-coded flows. No rigid scripts. Just dynamic, responsive, and intelligent interactions.

From automating customer service workflows to enabling deep analytical queries across datasets, ReAct opens the door to use cases across industries — finance, healthcare, logistics, legal, and beyond.

Elevate your AI game with LangChain: BigHub’s favorite new framework

LangChain: A Framework Built for the Future

LangChain is an open-source, modular framework designed to help developers harness the power of Large Language Models (LLMs). With support for multiple programming languages like Python and JavaScript, it's a flexible and accessible solution for building AI-driven apps that understand context, reason through problems, and act accordingly.

From the moment we first experimented with it, we saw how LangChain goes far beyond simple prompt-response applications. It enables agent-based systems — intelligent workflows that use reasoning, tool calling, and memory to accomplish complex tasks.

Why We Love It: Modularity, Integration, and Customization

Three things make LangChain stand out for us at BigHub:

- Ease of Integration – Plug it into existing systems quickly.

- Modular Design – Use only what you need, nothing more.

- High Customizability – Tailor it to fit specific business cases without rebuilding your stack.

LangChain’s structure allows businesses to evolve their AI capabilities without needing massive overhauls — ideal in today’s fast-moving tech environment.

Agents, Toolkits, and Use Cases

LangChain gives you the blueprint to build agents — smart components that combine reasoning with action. These agents can:

- Summarize documents

- Search databases

- Act as co-pilots in business workflows

- Power intelligent chatbots

- Answer complex queries with real-time data

Whatever the use case, LangChain's toolkit makes it easier to go from concept to prototype to production.

From Input to Insight: How LangChain Works

LangChain isn't just code — it’s a logical flow that mirrors human reasoning. Think of it like a dynamic flowchart, where each node represents a cognitive step: understanding the query, fetching relevant data, generating a prompt, and finally, crafting a response.

Here’s a simplified LangChain expression to illustrate this:

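    from operator import itemgetter

    from langchain_core.runnables import RunnableLambda

    # create_prompt, create_template_from_messages, search_azure_cognitive_search,
    # model and model_2 are assumed to be defined elsewhere in the application.
    chain = (
        {
            "query_text": itemgetter("query_text"),
            "chat_history": itemgetter("chat_history"),
            # Turn the chat history into a search query, then fetch sources.
            "sources": {"chat_history": itemgetter("chat_history")}
            | RunnableLambda(lambda x: create_prompt(x["chat_history"]))
            | model_2
            | RunnableLambda(search_azure_cognitive_search),
        }
        # Build the final prompt from the conversation and send it to the model.
        | RunnableLambda(lambda x: create_template_from_messages(x["chat_history"]))
        | model
    )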

This isn’t just syntax — it’s a story. A structured process that makes AI responses more relevant, informed, and conversational.
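The `|` operator in the expression above chains steps so that the output of one becomes the input of the next. The library-free sketch below imitates that idea with a tiny `Step` class. It is not how LangChain is implemented, and `Step`, `fetch_sources`, `build_prompt`, and `fake_model` are hypothetical names used only for illustration.

```python
class Step:
    """Wraps a function so steps can be chained with the | operator."""

    def __init__(self, fn):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Compose left to right: run self first, then feed the result to other.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x):
        return self.fn(x)


def fetch_sources(inputs: dict) -> dict:
    # Stand-in for a search call; returns the inputs plus retrieved sources.
    return {**inputs, "sources": ["doc about " + inputs["query_text"]]}


def build_prompt(inputs: dict) -> str:
    return f"Answer '{inputs['query_text']}' using {inputs['sources']}"


def fake_model(prompt: str) -> str:
    # Stand-in for an LLM call.
    return "MODEL OUTPUT FOR: " + prompt


chain = Step(fetch_sources) | Step(build_prompt) | Step(fake_model)
result = chain.invoke({"query_text": "pricing"})
print(result)
```

Because every step has the same shape (one input, one output), steps can be swapped or reordered without touching the rest of the pipeline.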

BigHub recognized as a Microsoft Fabric Featured Partner

We've always focused on leveraging the latest technologies to deliver top-notch solutions. From the start, one of our most important partnerships has been with a tech leader in cloud and AI – Microsoft. This year has been full of exciting updates, from becoming a Microsoft Managed Partner to achieving the latest Fabric Featured Partner status.

What is Microsoft Fabric?

Earlier this year, Microsoft unveiled Microsoft Fabric in public preview – an exciting end-to-end analytics solution that combines Azure Data Factory, Azure Synapse Analytics, and Power BI. This all-in-one analytics powerhouse features seven core workloads seamlessly integrated into a single architecture, enhancing data governance with Microsoft Purview, simplifying data management with OneLake, and empowering users through Microsoft 365 integration. With advanced AI features like Copilot, Fabric boosts productivity and offers partners opportunities to create innovative, value-driven solutions, making data environments more secure, compliant, and cost-effective.

Being a Fabric Featured Partner means we’re skilled in using Fabric and ready to guide our clients through its implementation. Our team has undergone extensive training, and with Fabric already running at many of our clients, we can provide top-notch support and insights.

What this means for our customers

Microsoft Fabric is a comprehensive data platform designed to streamline your organization's data processes. By unifying various data operations, it significantly reduces the costs associated with operating, integrating, managing, and securing your data.

- Stay ahead with cutting-edge tech: Partnering with BigHub, you can access advanced features and updates that will keep your solutions state-of-the-art.

- Better support: Our close relationship with Microsoft, and access to their experts and resources, enables us to resolve even the trickiest issues quickly and correctly.

- Boosted productivity with AI: Using Fabric helps both our customers and our team enhance productivity and enjoy significantly faster data access and time-to-delivery.

8 years in the game: BigHub’s journey from startup to key player in applied AI

Back in 2016, two friends, Karel Šimánek and Tomáš Hubínek, decided to take a different path. They were passionate about artificial intelligence long before it became trendy, and despite everyone advising them against it, they stuck to their vision. The result? BigHub—a company specializing in advanced data solutions born out of their determination to do things their way.

Clients that trust us

Our unique journey and perseverance were recognized first abroad, with our first clients coming from Germany and the US thanks to our cooperation with GoodData. But our domestic market quickly matured, and we started to work for enterprise clients in Czechia and Slovakia. The first of these came mainly, and quite surprisingly, from the energy sector: ČEZ, E.ON, PPAS, and VSE. But we always saw the potential of AI technology for transforming every business. Our goal has always been to make AI accessible for all kinds of businesses, big or small.

While large enterprise clients are still our primary clientele, thanks to the enormous growth of opportunities that came with GPT, we've been opening up to small and medium-sized businesses too. Our GPT-based solutions offer clients better access to data analytics, powerful automation of back-office processes, and upgrades to their services, for a fraction of what they would have cost 8 years ago.

Wide recognition of our expertise

We’ve had some incredible milestones along the way, which helped BigHub to be recognized as a leader in applied AI. In 2021, BigHub ranked among the top ten fastest-growing tech companies in Central Europe in the prestigious Deloitte Technology Fast 50 CE ranking. Not bad for a company that started with just a few friends and a lot of ambition!

BigHub became widely recognized in 2023 when we won the Readers' Choice Award for the best business story in the EY Entrepreneur of the Year competition. Apart from the successes in awards, we gained recognition in prestigious outlets like Forbes, Hospodářské noviny, CzechCrunch, and EURO.cz.

Both of our founders have shared their expertise on major platforms like CNN Prima NEWS, where they gave practical tips on spotting deepfakes and protecting against them, and in a series of interviews for Roklen24, where Karel Šimánek and Tomáš Hubínek discussed their insights on AI and the evolving digital landscape.

Our story shows how following your vision pays off, even when nobody else in the market sees it.

Business growth

We built BigHub on a strong team, a friendly culture, and meaningful work. These foundations have helped us grow sustainably over the years, by roughly 20 percent or more each year. This year, we’ll reach the big milestone of CZK 100 million in revenue.

We’ve evolved from offering custom-tailored projects to scalable products that still allow for client customization. It’s about finding that sweet spot between prefabricated solutions and personalized service, like automating insurance claim processes to save time for our clients.

Our growth is based on the smart and motivated people we have the privilege to work with daily. Our team now consists of 46 amazing individuals. Whether it’s football matches, cycling trips, or summer parties, we succeeded in building and maintaining a culture that turns colleagues into lifelong friends.

We’ve always believed that education is key to staying ahead in such a fast-moving industry. That's why we not only work hard for our clients but also focus on our own development. We regularly host internal workshops and hackathons, where our team can learn emerging technologies as well as come up with fresh ideas and innovative applications.

Acknowledged Partners

None of this would be possible without the support of our great partners. Microsoft has been a key technology vendor for many of our enterprise clients, and our partnership with them continues to thrive as our business grows. Over time the partnership has deepened, and we are proud to have gained multiple statuses: Microsoft Managed Partner, Microsoft Solution Partner for Data & AI on Azure, and most recently Fabric Featured Partner, to name but a few.

As our client base has expanded, we’ve become technology agnostic, working seamlessly with all the major platforms and gaining their partnerships as well, including AWS, Google Cloud, and Databricks. These partnerships are just another proof of our team's hard work and their never-ending will to learn. At the same time, proficiency in all the major technologies helps us to pick and deliver the very best solutions to our clients.

Giving back

Our connection to CTU – our alma mater – remains strong. For the past three years, we’ve been teaching a course on Applied Data Analysis, and this year we’re excited to launch a new follow-up course on Big Data tools and architecture. We sponsor student activities and provide both financial and non-financial support to initiatives like the Hlávka Foundation. We’re proud to say that our commitment to giving back remains unwavering. Whether it’s supporting the Data Talk community as a proud partner of the Data Mesh meetup for all the data enthusiasts out there, teaching, or mentoring students, we’re always looking for ways to inspire and contribute to the next generation of tech talent.

BigHub beyond the big puddle

This year we are strengthening our US presence! It represents a significant step forward in expanding our global reach, with a dedicated sales representative starting in October 2023 and multiple US clients already on board. It’s a bold move, and we couldn’t be more proud of the incredible team that made it happen. This is a big step for us in sharing our AI and data expertise with the largest market in the world!

As we look ahead, we’re more excited than ever for what the future holds and the new opportunities to innovate, grow, and give back. Our journey has only just started, and what a ride it has been so far!

BigHack: Turning real challenges into AI solutions

Pushing Boundaries with Generative AI

Artificial intelligence has always been in our DNA. Long before ChatGPT made headlines, we were using early GPT models for tasks like analyzing customer reviews. But the new wave of generative models has fundamentally changed what's possible. To keep up with — and get ahead of — this rapidly evolving space, we launched an internal initiative: BigHack, a hands-on hackathon focused on generative AI and its real-world applications.

We didn’t want to experiment in a vacuum. Instead, we chose real client challenges, making sure our teams were tackling scenarios that reflect the current business environment. The goal? Accelerate learning, build practical experience, and surface innovative AI-powered solutions for tangible problems.

Secure and Scalable: Choosing the Right Platform

One key consideration was how to work with these models in a secure and scalable way. While OpenAI’s public services have sparked mainstream adoption, they come with serious concerns around data privacy and usage. Many corporations have responded by banning them entirely.

That’s why we opted for Microsoft’s Azure OpenAI Service. It allows us to leverage the same powerful models while ensuring enterprise-grade data governance. With Azure, all data remains within the client’s control and is not used for model training. Plus, setting up the infrastructure was faster than ever — minutes instead of days compared to older on-prem solutions.

From Ideas to Prototypes: Two Real Projects

We selected two project ideas from a larger pool of discussions and got to work. With full team involvement and the power of the cloud, we built two working prototypes that could easily transition into production.

1. “Ask Your Data” App

This application enables business users to query analytical data using natural language, removing the dependency on data analysts for routine questions like:

- “What’s our churn rate?”

- “Where can we cut IT costs?”

By connecting directly to existing data sources, the app delivers answers in seconds, democratizing access to insights.
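The core of such an app is a translation from a question to a query against the existing data. The sketch below uses an in-memory SQLite table and a fixed lookup in place of the language model, so it only illustrates the flow. `QUESTION_TO_SQL`, `ask`, and the `customers` table are hypothetical, and a real implementation would have an LLM generate the SQL from the question and the schema.

```python
import sqlite3

# Hypothetical mapping from recognized questions to SQL. In a real app an LLM
# would generate the query from the question and the table schema.
QUESTION_TO_SQL = {
    "what's our churn rate?": (
        "SELECT ROUND(100.0 * SUM(churned) / COUNT(*), 1) FROM customers"
    ),
}


def ask(conn: sqlite3.Connection, question: str):
    """Answer a question by running the SQL mapped to it, if any."""
    sql = QUESTION_TO_SQL.get(question.strip().lower())
    if sql is None:
        return "Sorry, I cannot answer that yet."
    return conn.execute(sql).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, churned INTEGER)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, 0), (2, 1), (3, 0), (4, 0)],
)
churn = ask(conn, "What's our churn rate?")
print(f"Churn rate: {churn}%")
```

Keeping the query execution separate from the question translation makes it easier to validate or restrict generated SQL before it touches production data.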

2. Intelligent Internal Knowledge Access

Our second prototype tackled unstructured internal documentation — especially regulatory and policy content. We built a system that allows employees to ask free-form questions and get accurate responses from dense, often chaotic documentation.

This solution is built on two key pillars:

- Automated Reporting: Eliminates the need for complex dashboard setups by using AI to interpret well-governed data and generate reports based on simple queries.

- Regulatory Q&A: Helps users instantly find information buried in hundreds of pages of internal compliance or legal documents.
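Answering questions over long documents usually starts with retrieving the most relevant passage, which is then handed to the language model. The sketch below shows only that retrieval step, using naive word overlap as a stand-in for the embedding-based search a real system would more likely use. The `tokenize` and `best_passage` helpers and the sample passages are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def best_passage(question: str, passages: list[str]) -> str:
    """Return the passage sharing the most words with the question."""
    q = tokenize(question)
    return max(passages, key=lambda p: len(q & tokenize(p)))


passages = [
    "Employees must report a conflict of interest to compliance within 5 days.",
    "Travel expenses above the limit require approval from a director.",
    "Customer data may be retained for a maximum of ten years.",
]
hit = best_passage("Who approves travel expenses?", passages)
print(hit)
```

Word overlap misses synonyms ("approves" versus "approval" only match here through the other shared words), which is one reason production systems tend to rely on semantic search instead.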

How Our AI System Fights Fraud in International Shipping

BigHub has a longstanding partnership with a major international logistics firm, during which it has successfully implemented a diverse range of data projects. These projects have encompassed a variety of areas, including data engineering, real-time data processing, cloud and machine learning-based applications, all of which have been designed and developed to enhance the logistics company's operations, including warehouse management, supply chain optimization and the transportation of thousands of packages globally on a daily basis.

In 2022, BigHub was presented with a new challenge: to aid in the implementation of a system for the early detection of potentially fraudulent shipments entering the company's logistics network. Based on the client's pilot solution, which had been developed and tested using historical data, BigHub improved the algorithms and deployed them in a production environment for real-time evaluation of shipments as they entered the transportation network. The initial pilot solution was based on batch evaluation, but the requirement for our team was to create a REST API that could handle individual queries with a response time of less than 200 milliseconds. This API would be connected to the client's network, where further operations would be carried out on the data.

The proposed application is designed with a high-level architecture, as illustrated in the accompanying diagram. The core of the system is the REST API, which is connected to the client's network to receive and process queries. These queries are subject to validation and evaluation, with the results then returned to the end user. The data layer serves as the foundation for the calculations, as well as for the training of models and pre-processing of feature tables. The evaluation results are also stored in the data layer to facilitate the production of summary analyses in the reporting layer. The MLOps layer manages the lifecycle of the machine learning model, including training, validation, storage of metrics for each model version and making the current version of the model accessible via the REST API. To achieve this, the whole solution leverages a variety of modern data technologies, including Kubernetes, MLFlow, AirFlow, Teradata, Redis and Tableau.
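The request path described above (validate the query, look up precomputed features, evaluate, return the result) can be sketched as a single function. This is a simplified illustration rather than the production system: the field names, the `FEATURES` dictionary standing in for a low-latency store such as Redis, and the toy `score` rule standing in for the trained model are all assumptions.

```python
import time

# In-memory stand-in for a low-latency feature store such as Redis.
FEATURES = {
    "sender-42": {"past_shipments": 120, "past_fraud_flags": 0},
    "sender-99": {"past_shipments": 2, "past_fraud_flags": 1},
}

REQUIRED_FIELDS = ("shipment_id", "sender_id", "declared_value")


def score(features: dict, declared_value: float) -> float:
    """Toy scoring rule standing in for the trained model (assumption)."""
    risk = 0.5 * min(features["past_fraud_flags"], 1)
    risk += 0.3 if features["past_shipments"] < 5 else 0.0
    risk += 0.2 if declared_value > 10_000 else 0.0
    return round(risk, 2)


def evaluate(query: dict) -> dict:
    """Validate one query, score it, and report how long the evaluation took."""
    started = time.perf_counter()
    missing = [f for f in REQUIRED_FIELDS if f not in query]
    if missing:
        return {"status": 400, "error": f"missing fields: {missing}"}
    features = FEATURES.get(
        query["sender_id"], {"past_shipments": 0, "past_fraud_flags": 0}
    )
    risk = score(features, float(query["declared_value"]))
    elapsed_ms = (time.perf_counter() - started) * 1000
    return {
        "status": 200,
        "shipment_id": query["shipment_id"],
        "risk": risk,
        "suspicious": risk >= 0.5,
        "elapsed_ms": elapsed_ms,
    }
```

Precomputing features and reading them by key at request time is what keeps per-query work small enough for a strict latency budget.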

During the development of the system our team needed to address several challenges that include:

- Setting up and scaling the REST API to handle a high volume of queries in real time (260 queries per second from 30 parallel resources), ensuring it is ready for global deployment.

- Optimizing the evaluation speed of individual queries, through the use of low-level programming techniques, to reduce the time from hundreds of milliseconds to tens of milliseconds.

- Managing the machine learning model lifecycle, including automated retraining, deployment of new versions into API, monitoring of quality and notifications, to ensure reliable long-term performance.

- Implementing modifications on the fly: our agile approach kept the project flexible and allowed quick, successful changes while it was under way, to the satisfaction of both parties and with better results.
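The model lifecycle item in the list above (retrain, validate, deploy a new version) often comes down to a promotion rule: a retrained model replaces the one in production only if it measurably improves on it. The sketch below illustrates that rule with a small in-memory registry. `ModelRegistry` and its methods are hypothetical and stand in for what a tool such as MLflow would track, and the metric is assumed to be a validation score where higher is better.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelRegistry:
    """Keeps every trained version and tracks which one serves traffic."""
    versions: dict[int, float] = field(default_factory=dict)  # version -> metric
    production: Optional[int] = None

    def register(self, metric: float) -> int:
        """Store a newly trained model's validation metric; return its version."""
        version = len(self.versions) + 1
        self.versions[version] = metric
        return version

    def promote_if_better(self, version: int, min_gain: float = 0.0) -> bool:
        """Promote a candidate only if it beats the production metric."""
        candidate = self.versions[version]
        current = self.versions.get(self.production, float("-inf"))
        if candidate > current + min_gain:
            self.production = version
            return True
        return False


registry = ModelRegistry()
for metric in (0.91, 0.89, 0.94):  # three retraining runs
    version = registry.register(metric)
    promoted = registry.promote_if_better(version)
    print(f"v{version}: metric={metric}, promoted={promoted}")
```

Keeping rejected versions in the registry, rather than discarding them, preserves the metric history needed for monitoring and for rolling back.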

BigHub is a proud DATA mesh partner

An informal, regular meetup full of the latest data gossip, where you can attend talks from anybody who loves data, from legendary startup founders to junior data enthusiasts. We’re talking about the DATA Mesh meetup, of which we are a partner.

"We were looking for a place where we could meet informally to discuss and share experience from interesting projects with each other. That's why we decided to do regular data meetups," says Karel Šimánek, CEO of BigHub and one of the organizers and founders of DATA Mesh.

The meetup is held every month at the K7 club in Prague's Vršovice and visitors can enjoy short but inspiring presentations from various fields with people who have real experience with data and ML applications. Of course, there is also an afterparty, where you can make great contacts, and play the legendary Atomic Bomberman game for awesome prizes.

In total, there have been five meetups already with guests like Sara Polak, Jan Kučera from Datamole, Lukáš Jelínek from Pocket Virtuality or Jan Šindera from Nano energies.
