BigHub Blog - Read, Discover, Get inspired.

The latest industry news, interviews, technologies, and resources.

Why MCP might be the HTTP of the AI-first era

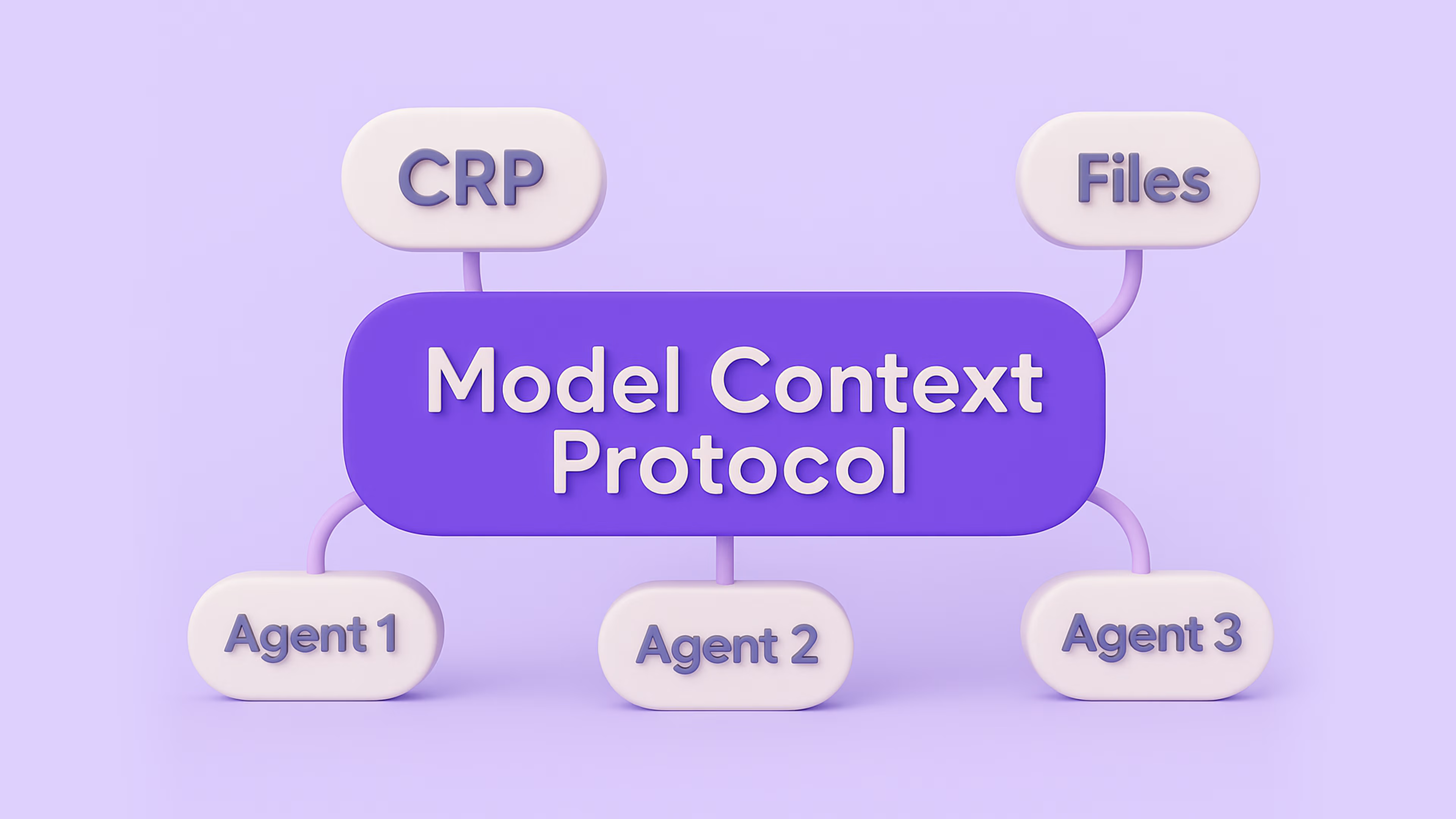

What Is MCP – and Why Should You Care?

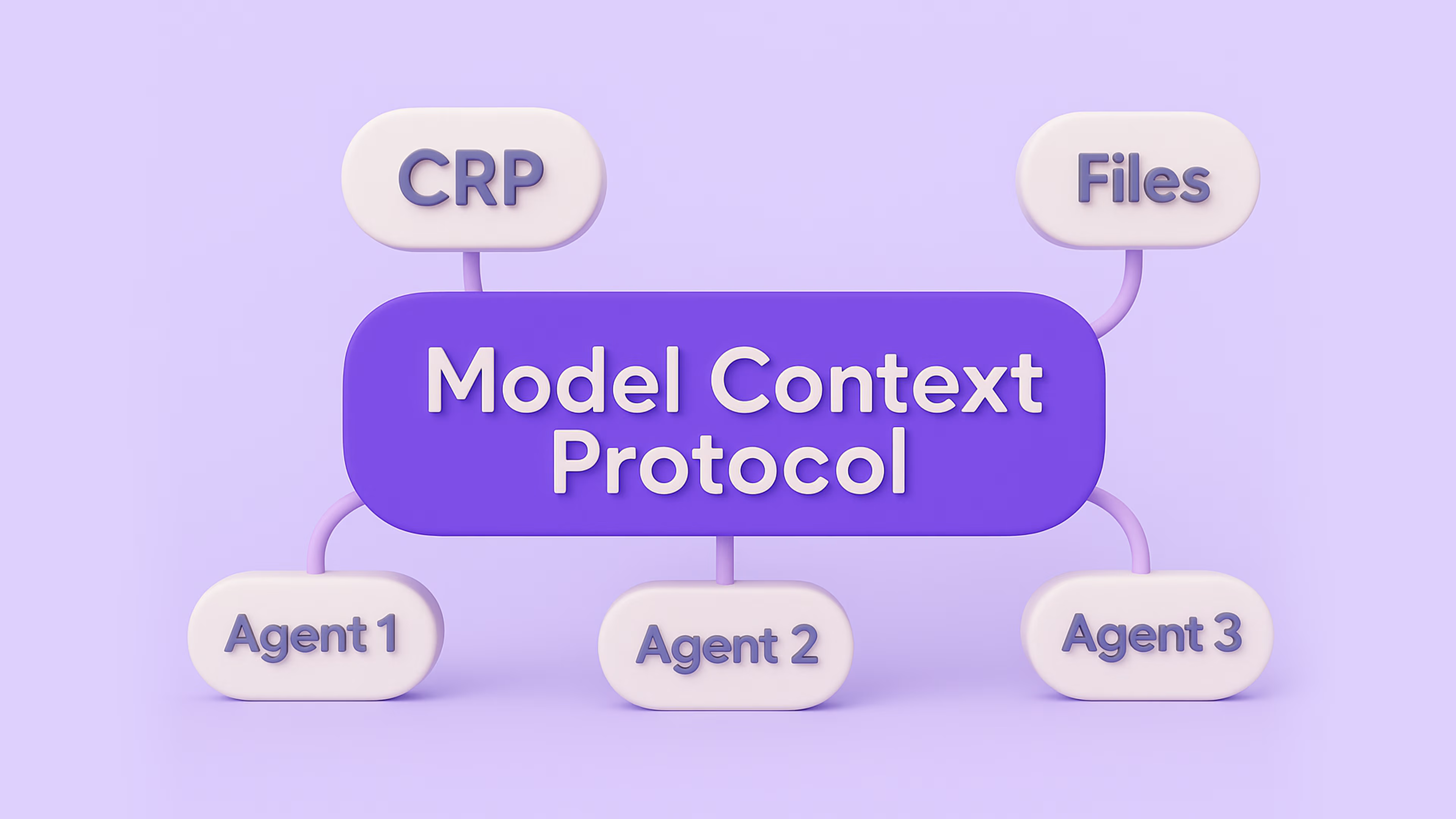

Model Context Protocol may sound like something out of an academic paper or internal Big Tech documentation. But in reality, it’s a standard that enables different AI systems to seamlessly communicate—not just with each other, but also with APIs, business tools, and humans.

Today’s AI tools—whether chatbots, voice assistants, or automation bots—are typically limited to narrow tasks and single systems. MCP changes that. It allows intelligent systems to:

- Check your e-commerce order status

- Review your insurance contract

- Reschedule your doctor’s appointment

- Arrange delivery and payment

All without switching apps or platforms. And more importantly: without every company needing to build its own AI assistant. All it takes is making services and processes “MCP-accessible.”
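What does "MCP-accessible" mean in practice? MCP messages are JSON-RPC 2.0. An agent discovers what a service offers with the `tools/list` method and invokes a capability with `tools/call`. Below is a minimal, stdlib-only sketch of that server-side exchange. The order store and the `get_order_status` tool are hypothetical. The sketch also deliberately leaves out the initialization handshake, transports, and authorization that a real MCP server would handle, for example one built with an official MCP SDK.

```python
import json

# Hypothetical order store standing in for a real e-commerce backend.
ORDERS = {"A-1001": "shipped", "A-1002": "processing"}


def get_order_status(order_id: str) -> str:
    """The business capability we want external agents to reach."""
    return ORDERS.get(order_id, "unknown")


# Capabilities advertised to agents; inputSchema is a JSON Schema object.
TOOLS = {
    "get_order_status": {
        "description": "Return the current status of an e-commerce order.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
        "handler": get_order_status,
    }
}


def _reply(request_id, result=None, error=None):
    """Wrap a result or an error in a JSON-RPC 2.0 response."""
    message = {"jsonrpc": "2.0", "id": request_id}
    if error is not None:
        message["error"] = error
    else:
        message["result"] = result
    return json.dumps(message)


def handle_message(raw: str) -> str:
    """Answer a single JSON-RPC request for tools/list or tools/call."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        tools = [
            {"name": name, "description": spec["description"], "inputSchema": spec["inputSchema"]}
            for name, spec in TOOLS.items()
        ]
        return _reply(request_id, {"tools": tools})
    if method == "tools/call":
        params = request.get("params", {})
        spec = TOOLS.get(params.get("name"))
        if spec is None:
            return _reply(request_id, error={"code": -32602, "message": "Unknown tool"})
        text = spec["handler"](**params.get("arguments", {}))
        return _reply(request_id, {"content": [{"type": "text", "text": text}], "isError": False})
    return _reply(request_id, error={"code": -32601, "message": "Method not found"})


# Example: an agent asks for the status of order A-1001.
call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "get_order_status", "arguments": {"order_id": "A-1001"}}}
print(handle_message(json.dumps(call)))
```

A production server would also validate `arguments` against `inputSchema` before calling the handler.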

From AI as a Tool to AI as an Interface

Until now, AI in business has mostly served as a support tool for employees—helping with search, data analysis, or faster decision-making. But MCP unlocks a new paradigm:

Instead of building AI tools for internal use, companies will expose their services to be used by external AI systems—especially those owned by customers themselves.

That means the customer is no longer forced to use the company’s interface. They can interact with your services through their own AI assistant, tailored to their preferences and context. It’s a fundamental shift. Just as the web changed how we accessed information, and mobile apps changed how we shop or travel, MCP and intelligent interfaces will redefine how people interact with companies.

The AI-First Era Is Already Here

It wasn’t long ago that people began every query with Google. Today, more and more users turn first to ChatGPT, Perplexity, or their own digital assistant. That shift is real: AI is becoming the entry point to the digital world.

“Web-first” and “mobile-first” are no longer enough. We’re entering an AI-first era—where intelligent interfaces will be the first layer that handles requests, questions, and decisions. Companies must be ready for that.

What This Means for Companies

1. No More Need to Build Your Own Chatbot

Companies spend significant resources building custom chatbots, voice systems, and interfaces. These tools are expensive to maintain and hard to scale.

With MCP, the user shows up with their own AI system and expects only one thing: structured access to your services and information. No need to worry about UX, training models, or customer flows—just expose what you do best.

2. Traditional Call Centers Become Obsolete

Instead of calling your support line, a customer can query their AI assistant, which connects directly to your systems, gathers answers, or executes tasks.

No queues. No wait times. No pressure on your staffing model. Operations move into a seamless, automated ecosystem.

3. New Business Models and Brand Trust

Because users will bring their own trusted digital interface, companies no longer carry the burden of poor chatbot experiences. And thanks to MCP’s built-in structure for access control and transparency, businesses can decide who sees what, when, and how—while building trust and reducing risks.

What This Means for Everyday Users

- One interface for everything: no more juggling dozens of logins, websites, or apps. One assistant does it all.

- True autonomy: your digital assistant can order products, compare options, request refunds, or manage appointments, with no manual effort required.

- Smarter, faster decisions: the system knows your preferences, history, and goals, and makes intelligent recommendations tailored to you.

Practical example:

You ask your AI to generate a recipe, check your pantry, compare prices across online grocers, pick the cheapest options, and schedule delivery—all in one go, no clicking required.

The Underrated Challenge: Data

For this to work, users will need to give their AI systems access to personal data. And companies will need to open up parts of their systems to the outside world. That’s where trust, governance, and security become mission-critical. MCP provides a standardized framework for managing access, ensuring safety, and scaling cooperation between systems—without replicating sensitive data or creating silos.

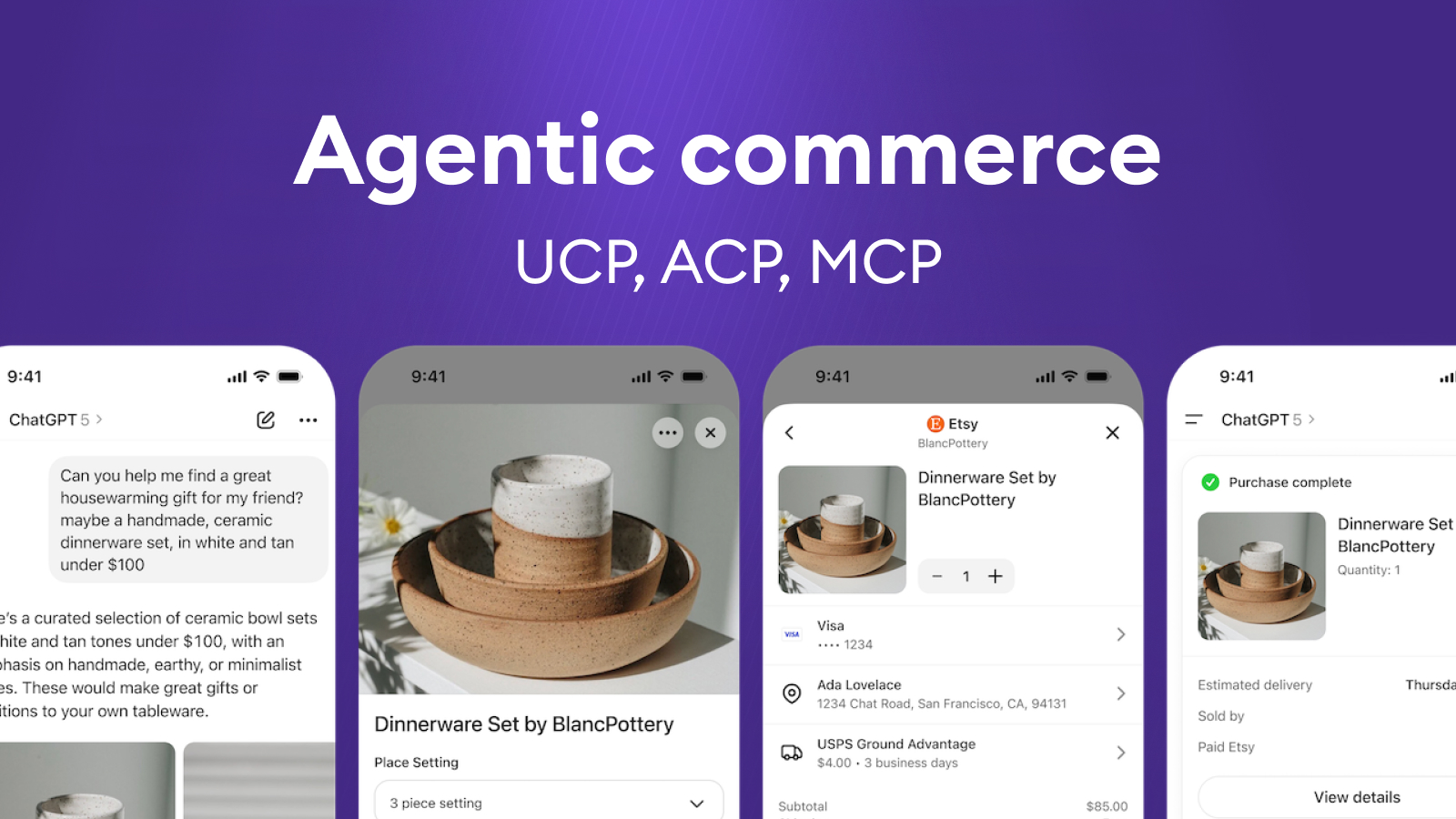

UCP, ACP, MCP in Agentic Commerce: AI is moving from “recommending” to “doing”

Where we are now

Over the last few months, several important things have happened in AI and e-commerce:

- Google introduced UCP (Universal Commerce Protocol) – an open standard for agentic commerce that unifies how an AI agent talks to a merchant: catalogue, cart, shipping, payment, order.

- OpenAI and Stripe launched ACP (Agentic Commerce Protocol) – a protocol for agentic checkout in the ChatGPT ecosystem.

- At the same time, MCP (Model Context Protocol) has emerged as a general way for agents to call tools and services, while the OpenAI Apps SDK has become a product and distribution layer for agent apps.

In other words, the internet is starting to define standardised “rails” for how AI agents will shop. And the market is shifting from “AI recommends” to “AI actually executes the transaction”.

In this article, we look at:

- what agentic commerce means in practice,

- how UCP, MCP, Apps SDK and ACP fit together,

- what these standards solve – and what they very intentionally don’t solve,

- and where custom agentic commerce makes sense – the exact type of work we do at BigHub.

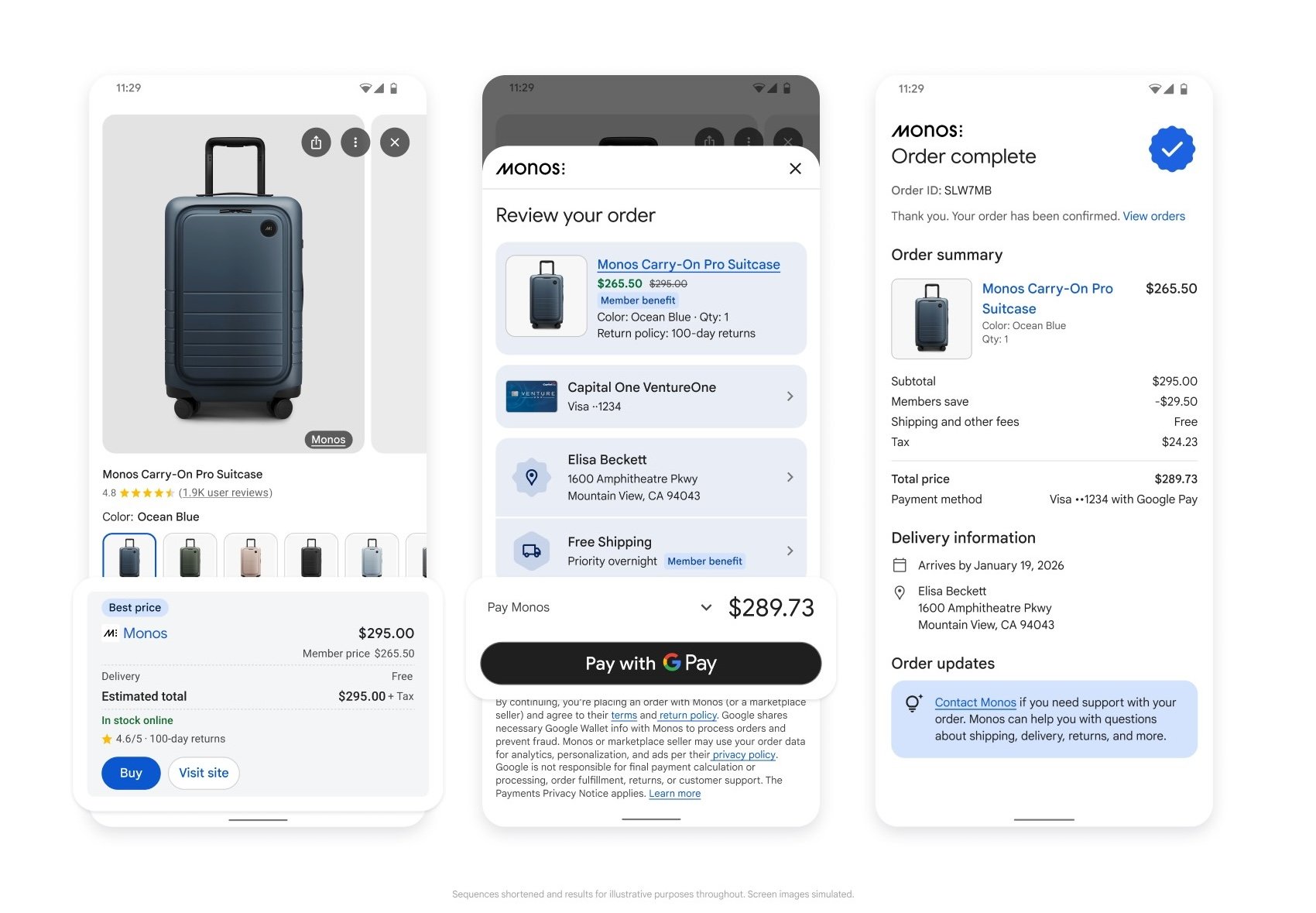

What Is Agentic Commerce

Agentic commerce is a shopping flow where an AI agent handles part or all of the process on behalf of a person or a business – from discovery and comparison to payment.

A typical scenario:

“Find me marathon running shoes under $150 that can be delivered within two weeks.”

The agent then:

- understands the request,

- queries multiple merchants,

- compares parameters, reviews, prices and delivery options,

- builds a shortlist,

- and, once the user approves, completes the purchase – ideally without the user ever touching a traditional web checkout.
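The compare, shortlist, and approve steps above could look roughly like this. The offers, merchants, and ranking rule (best rating first, then lowest price) are illustrative assumptions. A real agent would gather offers from merchant APIs and weigh many more attributes. "Within two weeks" is taken as 14 days.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    merchant: str
    product: str
    price_usd: float
    delivery_days: int
    rating: float  # average review score, 0-5


def build_shortlist(offers, max_price, max_delivery_days, top_n=3):
    """Keep offers that meet the user's constraints; best-rated, then cheapest, first."""
    eligible = [o for o in offers
                if o.price_usd <= max_price and o.delivery_days <= max_delivery_days]
    return sorted(eligible, key=lambda o: (-o.rating, o.price_usd))[:top_n]


def complete_purchase(offer, user_approved):
    """Place the order only after explicit user approval (human in the loop)."""
    if not user_approved:
        return f"Awaiting approval for {offer.product} from {offer.merchant}"
    return f"Order placed: {offer.product} from {offer.merchant} at ${offer.price_usd:.2f}"


# Hypothetical offers gathered from several merchants.
offers = [
    Offer("ShopA", "Racer X", 139.0, 5, 4.6),
    Offer("ShopB", "Racer X", 129.0, 20, 4.6),    # too slow: 20 days > 14
    Offer("ShopC", "Glide 2", 149.0, 7, 4.8),
    Offer("ShopD", "Carbon Pro", 189.0, 3, 4.9),  # over the $150 budget
]
shortlist = build_shortlist(offers, max_price=150, max_delivery_days=14)
print([o.merchant for o in shortlist])
print(complete_purchase(shortlist[0], user_approved=False))
```

Keeping the approval check inside `complete_purchase` means the agent cannot skip it, however the shortlist was produced.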

This doesn’t only apply to B2C. Similar patterns show up in:

- internal procurement,

- B2B ordering,

- recurring replenishment,

- service and returns flows.

The direction is clear: AI is moving from “help me choose” to “get it done for me”.

MCP, Apps SDK, UCP and ACP

It’s useful to see today’s stack as layers.

MCP (Model Context Protocol) is:

- a general standard for how an agent calls tools, APIs and services,

- domain-agnostic (“I can talk to CRM, pricing, catalogue, ERP, …”),

- effectively the way the agent “sees” the world – through capabilities it can invoke.

In short: MCP = how the agent reaches into your systems.

OpenAI Apps SDK:

- provides UI, runtime and distribution for agents (ChatGPT Apps, user-facing interface),

- lets you quickly wrap an agent into a usable product:

  - chat, forms, actions,

  - distribution inside the ChatGPT ecosystem,

  - basic management and execution.

In short: Apps SDK = how you turn an agent into a product people actually use.

UCP – Domain standard for commerce workflows

UCP (Universal Commerce Protocol) from Google and partners:

- is a domain-specific standard for commerce,

- unifies how an agent talks to a merchant about:

  - catalogue, variants, prices,

  - cart, shipping, payment, order,

  - discounts, loyalty, refunds, tracking and support,

- is designed to work across Google Search, Gemini and other AI surfaces.

In short: UCP = the concrete language and workflow of buying.
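A shared commerce language essentially fixes which steps exist and in what order they may happen. The sketch below models a generic checkout as a state machine that rejects out-of-order steps. The state names and transitions are our own illustrative assumptions, not the actual UCP (or ACP) schema.

```python
from enum import Enum


class CheckoutState(Enum):
    BROWSING = "browsing"
    CART = "cart"
    SHIPPING_SELECTED = "shipping_selected"
    PAYMENT_AUTHORISED = "payment_authorised"
    ORDER_PLACED = "order_placed"


# Allowed transitions (illustrative); any other step is rejected.
TRANSITIONS = {
    CheckoutState.BROWSING: {CheckoutState.CART},
    CheckoutState.CART: {CheckoutState.BROWSING, CheckoutState.SHIPPING_SELECTED},
    CheckoutState.SHIPPING_SELECTED: {CheckoutState.CART, CheckoutState.PAYMENT_AUTHORISED},
    CheckoutState.PAYMENT_AUTHORISED: {CheckoutState.ORDER_PLACED},
    CheckoutState.ORDER_PLACED: set(),  # terminal state
}


class CheckoutSession:
    """Tracks one agent-driven checkout and enforces the step order."""

    def __init__(self):
        self.state = CheckoutState.BROWSING
        self.history = [self.state]

    def advance(self, target: CheckoutState) -> None:
        if target not in TRANSITIONS[self.state]:
            raise ValueError(f"Illegal transition {self.state.value} -> {target.value}")
        self.state = target
        self.history.append(target)


session = CheckoutSession()
for step in (CheckoutState.CART, CheckoutState.SHIPPING_SELECTED,
             CheckoutState.PAYMENT_AUTHORISED, CheckoutState.ORDER_PLACED):
    session.advance(step)
print([s.value for s in session.history])
```

Because both sides agree on the same states, an agent cannot, for example, place an order before payment has been authorised.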

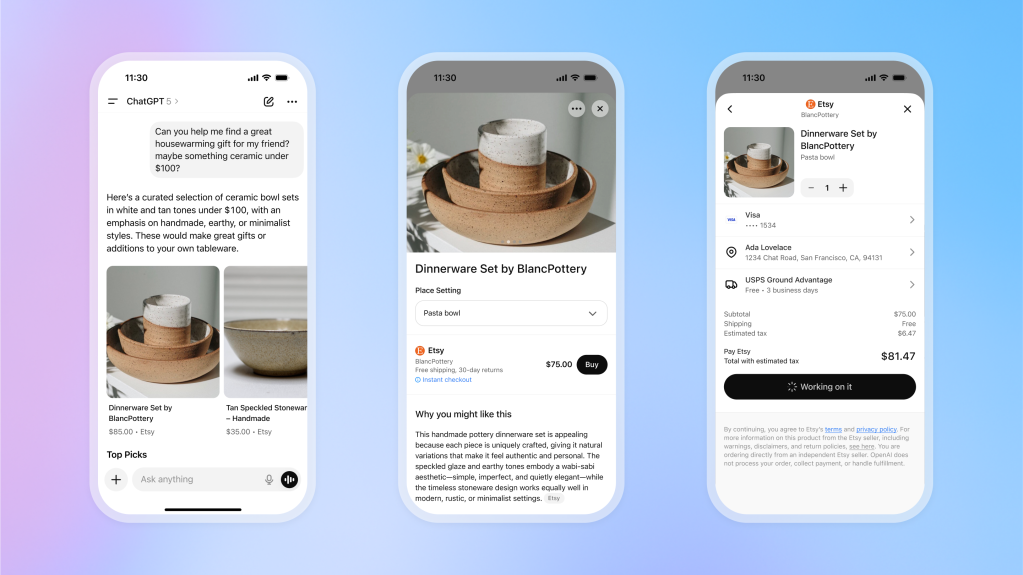

ACP – Agentic checkout in the ChatGPT ecosystem

ACP (Agentic Commerce Protocol) from OpenAI/Stripe:

- targets a similar domain from the ChatGPT side,

- focuses strongly on checkout, payments and orders,

- powers features like Instant Checkout in ChatGPT.

From a merchant’s point of view, UCP and ACP are competing commerce standards (no one wants three different protocols in their stack).

From an architecture point of view, they can coexist as different dialects an agent uses depending on the channel (ChatGPT vs. Google / Gemini).

What these standards do – and what they don’t

The common pattern is important. UCP and ACP do not make agents “smart”. They just give them a consistent language.

These standards typically cover:

- how the agent formally communicates with the merchant and checkout,

- how offers and orders are structured,

- how payment and authorisation are handled securely,

- how a purchase can flow across different AI channels.

They do not (and cannot) solve:

- the quality and structure of your product catalogue, attributes and availability,

- integration into ERP, WMS/OMS, CRM, loyalty, pricing engine, campaign tooling,

- your business logic – margin vs. SLA vs. customer experience vs. revenue,

- governance, risk, approvals – who is allowed to order what, when a human must step in, how decisions are audited.

Practically, this means:

- you can be formally “UCP/ACP-ready”,

- and still deliver a poor agent experience if:

  - data is inconsistent,

  - delivery promises can’t be kept,

  - pricing and promo logic breaks in a multi-channel world,

  - the agent has no access to real-time states and internal rules.

The standard is a necessary technical minimum, not a finished solution.

How we approach Agentic Commerce at BigHub

At BigHub, we see UCP, MCP, ACP and Apps SDK as infrastructure building blocks. On real projects, we focus on what creates actual competitive advantage on top of them.

We build ML-powered commerce agents that can:

- optimise dynamic offers and pricing (bundles, alternatives, smart trade-offs based on margin, SLA and priorities),

- deliver personalised search and shortlists (customer context, preferences, budget, interaction history),

- handle argumentation and objections (why this option, what are the alternatives, explain the trade-offs),

- and only then smoothly push the checkout over the finish line.

On top of that, we add an integration layer via MCP (capabilities + connections to core systems). For UI and distribution, we often use OpenAI Apps SDK when we need to get an agent in front of real users quickly. Where it makes sense, we plug into standards like UCP/ACP instead of writing bespoke integrations for every single channel.

Where custom Agentic Commerce makes the difference

Standards (UCP/ACP/MCP) are extremely valuable where:

- you don’t want to invent your own protocol for connecting to AI channels,

- you need interoperability (ChatGPT, Google/Gemini, others),

- you want to reduce integration overhead for merchants.

A custom approach adds the most value in these areas:

1) Connecting the agent to core systems

- ERP, WMS/OMS, CRM, loyalty, pricing, returns, contact centre…

- the agent must live in your real operational architecture, not a demo sandbox.

Typically you need a dedicated integration and orchestration layer that:

- speaks UCP/ACP/MCP “upwards”,

- speaks your specific systems and APIs “downwards”.
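Here is a minimal sketch of such a layer. It assumes a hypothetical agent payload shape and uses an in-memory stand-in for the ERP. The upward side translates an incoming agent request into a protocol-neutral `OrderRequest`. The downward side applies an internal stock check and then calls the system of record. In a real layer, each protocol (UCP, ACP, an MCP tool call) would get its own translator, with field names taken from that protocol's specification.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class OrderRequest:
    """Protocol-neutral order, independent of the channel it arrived on."""
    sku: str
    quantity: int
    customer_id: str


class ErpClient(Protocol):
    def create_sales_order(self, customer: str, lines: list[tuple[str, int]]) -> str: ...


class InMemoryErp:
    """Hypothetical stand-in for an ERP API; returns sequential order numbers."""

    def __init__(self):
        self.orders = []

    def create_sales_order(self, customer, lines):
        self.orders.append((customer, lines))
        return f"SO-{len(self.orders):05d}"


class OrchestrationLayer:
    def __init__(self, erp: ErpClient, stock: dict[str, int]):
        self.erp = erp
        self.stock = stock

    # "Upwards": map an agent payload (hypothetical field names) to the neutral model.
    def translate_agent_request(self, payload: dict) -> OrderRequest:
        item = payload["line_items"][0]
        return OrderRequest(sku=item["id"], quantity=item["quantity"],
                            customer_id=payload["buyer"]["id"])

    # "Downwards": enforce internal rules, then call the system of record.
    def place_order(self, order: OrderRequest) -> str:
        if self.stock.get(order.sku, 0) < order.quantity:
            raise ValueError(f"Insufficient stock for {order.sku}")
        self.stock[order.sku] -= order.quantity
        return self.erp.create_sales_order(order.customer_id, [(order.sku, order.quantity)])


erp = InMemoryErp()
layer = OrchestrationLayer(erp, {"SKU-1": 5})
request = layer.translate_agent_request(
    {"line_items": [{"id": "SKU-1", "quantity": 2}], "buyer": {"id": "C-42"}})
print(layer.place_order(request))
```

Keeping the business checks in `place_order` means every channel shares them, no matter which protocol the request came through.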

2) Domain logic and business rules

This is where competitive advantage is created:

- when the agent can execute autonomously vs. when it should only recommend,

- how it balances margin, SLA, availability, customer experience and revenue,

- how it works with promotions, loyalty, cross-sell / up-sell scenarios.

This is not a protocol question. It’s about concrete rules on top of your data and KPIs.
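Rules like these can be made explicit and testable. The sketch below decides whether an agent may execute an order, should only recommend it, or must escalate to a human. The segments, euro limits, and margin threshold are hypothetical placeholders for your own policies and KPIs.

```python
from dataclasses import dataclass


@dataclass
class ProposedOrder:
    total_eur: float
    margin_pct: float
    promised_delivery_days: int
    sla_delivery_days: int
    customer_segment: str  # e.g. "b2c", "b2b", "internal"


# Hypothetical per-segment ceilings for autonomous execution.
AUTONOMY_LIMITS_EUR = {"b2c": 200.0, "b2b": 2_000.0, "internal": 500.0}
MIN_MARGIN_PCT = 10.0


def decide(order: ProposedOrder) -> str:
    """Return 'execute', 'recommend' or 'escalate' for a proposed order."""
    if order.promised_delivery_days > order.sla_delivery_days:
        return "escalate"   # the SLA cannot be kept, so a human decides
    limit = AUTONOMY_LIMITS_EUR.get(order.customer_segment, 0.0)  # unknown segment: no autonomy
    if order.total_eur <= limit and order.margin_pct >= MIN_MARGIN_PCT:
        return "execute"    # within limits: the agent may complete the transaction
    return "recommend"      # otherwise the agent only proposes the option


print(decide(ProposedOrder(150, 15, 3, 5, "b2c")))
```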

3) Multi-channel and the mix of B2C / B2B / internal agents

Real-world commerce looks like this:

- B2C webshop,

- B2B ordering portal,

- internal purchasing agent,

- in-store sales assistant,

- customer service agent.

A custom framework lets you:

- share logic across roles and channels,

- respect permissions and limits,

- support flows like “AI starts in chat, finishes in the store”.

4) European context: regulation, security, data residency

For European companies, several constraints matter:

- regulation (EU AI Act, GDPR, sector-specific rules),

- internal security posture, audits, risk controls,

- where data and models actually run (US vs. EU),

- how explainable and auditable agent decisions are.

Standards are global, but architecture and governance have to be local and tailored.

What retailers and enterprises should take away from UCP (and other similar protocols)

If you’re thinking about agentic commerce, it’s worth asking a few practical questions:

- Are we “agent-ready” not only at the protocol level, but also in terms of data and processes?

- In which use cases do we actually want the agent to execute the transaction – and where should it stay at the recommendation level?

- How will agentic commerce fit into our existing systems, pricing, campaigns and SLAs?

- Who owns agent initiatives internally (KPI, P&L) and how will we measure success?

- Which parts make sense to solve via standards (UCP/ACP/MCP) and where do we already need a custom agent framework?

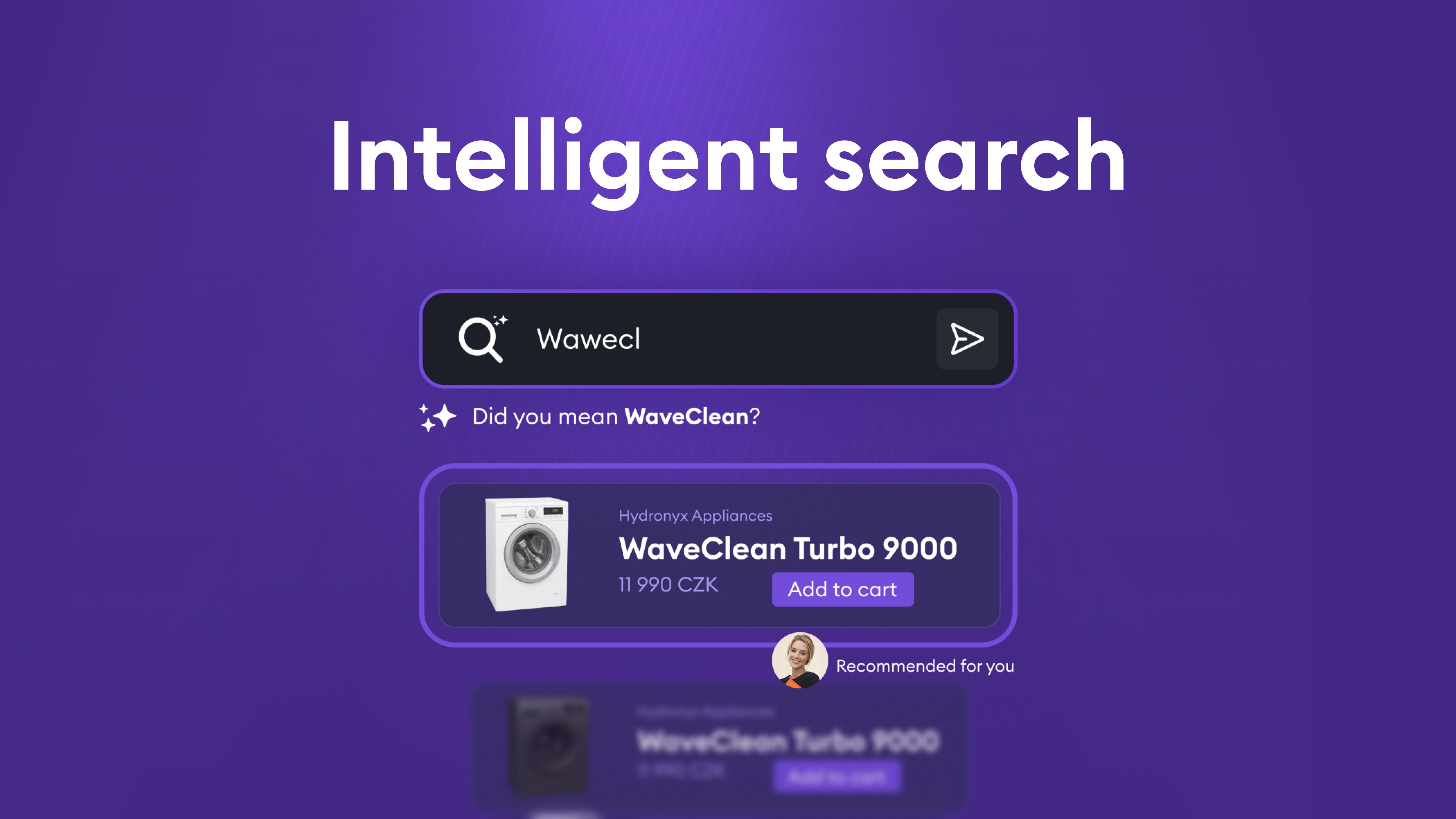

How to build intelligent search: From full-text to optimized hybrid search

The problem: Limits of traditional search

Classic full-text search based on algorithms like BM25 has several fundamental constraints:

1. Typos and variants

- Users frequently submit queries with typos or alternate spellings.

- Traditional search expects exact or near-exact text matches.

2. Title-only searching

- Full-text search often targets specific fields (e.g., product or entity name).

- If relevant information lives in a description or related entities, the system may miss it.

3. Missing semantic understanding

- The system doesn’t understand synonyms or related concepts.

- A query for “car” won’t find “automobile” or “vehicle,” even though they are the same concept.

- Cross-lingual search is nearly impossible—a Czech query won’t retrieve English results.

4. Contextual search

- Users often search by context, not exact names.

- For example, “products by manufacturer X” should return all relevant products, even if the manufacturer name isn’t explicitly in the query.
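The typo and synonym limits follow directly from how BM25 scores documents: a query term contributes only when that exact token appears in the document. The minimal BM25 scorer below illustrates this. It assumes plain whitespace tokenization and the common defaults k1 = 1.2 and b = 0.75, and uses the non-negative Lucene-style IDF. Real engines add analyzers, stemming, and fuzzy matching on top.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


class BM25:
    def __init__(self, docs, k1=1.2, b=0.75):
        self.docs = [tokenize(d) for d in docs]
        self.k1, self.b = k1, b
        self.n = len(self.docs)
        self.avgdl = sum(len(d) for d in self.docs) / self.n
        # Document frequency: in how many documents each term appears.
        self.df = Counter(term for d in self.docs for term in set(d))

    def idf(self, term):
        n_q = self.df.get(term, 0)
        return math.log((self.n - n_q + 0.5) / (n_q + 0.5) + 1)

    def score(self, query, index):
        doc = self.docs[index]
        tf = Counter(doc)
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            if f == 0:
                continue  # no exact token match, so this term adds nothing
            norm = f + self.k1 * (1 - self.b + self.b * len(doc) / self.avgdl)
            total += self.idf(term) * f * (self.k1 + 1) / norm
        return total


docs = ["red sports car for sale", "used automobile in good condition", "electric vehicle charger"]
bm25 = BM25(docs)
scores = [bm25.score("car", i) for i in range(len(docs))]
print(scores)
```

The query "car" scores zero against the "automobile" and "vehicle" documents, and the typo "cra" would match nothing at all.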

The solution: Hybrid search with embeddings

The remedy is to combine two approaches: traditional full-text search (BM25) and vector embeddings for semantic search.

Vector embeddings for semantic understanding

Vector embeddings map text into a multi-dimensional space where semantically similar meanings sit close together. This enables:

- Meaning-based retrieval: A query like “notebook” can match “laptop,” “portable computer,” or related concepts.

- Cross-lingual search: A Czech query can find English results if they share meaning.

- Contextual search: The system captures relationships between entities and concepts.

- Whole-content search: Embeddings can represent the entire document, not just the title.
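Semantic closeness is usually measured with cosine similarity between vectors. The minimal sketch below uses made-up three-dimensional vectors; real models such as text-embedding-3-large return 3,072 dimensions by default. It shows how a "notebook" query lands next to "laptop" and "portable computer" even though the strings share no words. In practice, the query vector would come from the same embedding model as the documents.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings" for three indexed texts.
EMBEDDINGS = {
    "laptop": [0.90, 0.10, 0.05],
    "portable computer": [0.85, 0.15, 0.10],
    "kitchen knife": [0.05, 0.20, 0.95],
}


def semantic_search(query_vector, top_k=2):
    """Brute-force ranking by cosine similarity (HNSW approximates this at scale)."""
    ranked = sorted(EMBEDDINGS.items(),
                    key=lambda item: cosine_similarity(query_vector, item[1]),
                    reverse=True)
    return [text for text, _ in ranked[:top_k]]


notebook = [0.88, 0.12, 0.08]  # pretend embedding of the query "notebook"
print(semantic_search(notebook))
```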

Why embeddings alone are not enough

Embeddings are powerful, but not sufficient on their own:

- Typos: Small character changes can produce very different embeddings.

- Exact matches: Sometimes we need precise string matching, where full-text excels.

- Performance: Vector search can be slower than optimized full-text indexes.

A hybrid approach: BM25 + HNSW

The ideal solution blends both:

- BM25 (Best Matching 25): A classic full-text algorithm that excels at exact keyword matches; combined with the fuzzy matching that full-text engines provide, it can also tolerate typos.

- HNSW (Hierarchical Navigable Small World): An efficient approximate nearest-neighbor (ANN) algorithm for fast vector search.

Combining them yields the best of both worlds: the precision of full-text for exact matches and the semantic understanding of embeddings for contextual queries.
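One common way to merge the BM25 and vector result lists is Reciprocal Rank Fusion (RRF), which is also the method Azure AI Search uses for hybrid queries. Each document earns 1/(k + rank) from every list it appears in, so items found by both retrievers rise to the top. This minimal sketch uses hypothetical product IDs and k = 60, the constant commonly used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of document IDs; ranks start at 1 in each list."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical results for one query from each retriever.
bm25_results = ["sku-17", "sku-03", "sku-42"]    # exact keyword matches
vector_results = ["sku-03", "sku-88", "sku-17"]  # semantic neighbors, e.g. via HNSW
fused = reciprocal_rank_fusion([bm25_results, vector_results])
print(fused)
```

With these inputs, `sku-03` and `sku-17` come first because both retrievers found them. RRF needs only ranks, so the raw BM25 and vector scores never have to be put on a common scale.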

The challenge: Getting the ranking right

Finding relevant candidates is only step one. Equally important is ranking them well. Users typically click the first few results; poor ordering undermines usefulness.

Why simple “Sort by” is not enough

Sorting by a single criterion (e.g., date) fails because multiple factors matter simultaneously:

- Relevance: How well the result matches the query (from both full-text and vector signals).

- Business value: Items with higher margin may deserve a boost.

- Freshness: Newer items are often more relevant.

- Popularity: Frequently chosen items may be more interesting to users.

Scoring functions: Combining multiple signals

Instead of a simple sort, you need a composite scoring system that blends:

- Full-text score: How well BM25 matches the query.

- Vector distance: Semantic similarity from embeddings.

- Scoring functions, such as:

  - Magnitude functions for margin/popularity (higher value → higher score).

  - Freshness functions for time (newer → higher score).

  - Other business metrics as needed.

The final score is a weighted combination of these signals. The hard part is that the right weights are not obvious—you must find them experimentally.
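Below is a simplified sketch of such a weighted combination. The linear magnitude and freshness functions illustrate the idea and are not Azure AI Search's exact formulas. The field names, ranges, and weights are hypothetical. We also assume the text and vector scores have already been normalized to the range 0 to 1.

```python
from datetime import datetime, timedelta, timezone


def magnitude_boost(value, range_start, range_end):
    """Linear boost: 0 at range_start, 1 at range_end, clamped outside the range."""
    if range_end == range_start:
        return 0.0
    x = (value - range_start) / (range_end - range_start)
    return min(max(x, 0.0), 1.0)


def freshness_boost(updated, now, duration_days):
    """Linear decay: 1 for a brand-new item, 0 once it is older than duration_days."""
    age_days = (now - updated).total_seconds() / 86400
    return min(max(1.0 - age_days / duration_days, 0.0), 1.0)


# Hypothetical weights; finding good values is the job of hyperparameter search.
WEIGHTS = {"text": 1.0, "vector": 1.5, "margin": 0.3, "freshness": 0.2, "popularity": 0.5}


def composite_score(item, now):
    """Weighted sum of relevance signals and business boosts."""
    return (WEIGHTS["text"] * item["text_score"]
            + WEIGHTS["vector"] * item["vector_score"]
            + WEIGHTS["margin"] * magnitude_boost(item["margin_pct"], 0, 40)
            + WEIGHTS["freshness"] * freshness_boost(item["updated"], now, 90)
            + WEIGHTS["popularity"] * magnitude_boost(item["orders_30d"], 0, 500))


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
items = [
    {"id": "A", "text_score": 0.9, "vector_score": 0.60, "margin_pct": 5,
     "updated": now - timedelta(days=200), "orders_30d": 20},
    {"id": "B", "text_score": 0.7, "vector_score": 0.65, "margin_pct": 35,
     "updated": now - timedelta(days=10), "orders_30d": 400},
]
ranked = sorted(items, key=lambda it: composite_score(it, now), reverse=True)
print([it["id"] for it in ranked])
```

Here item B outranks item A despite a weaker text match, because its margin, freshness, and popularity boosts outweigh the difference in relevance.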

Hyperparameter search: Finding optimal weights

Tuning weights for full-text, vector embeddings, and scoring functions is critical to result quality. We use hyperparameter search to do this systematically.

Building a test dataset

A good test set is the foundation of successful hyperparameter search. We assemble a corpus of queries where we know the ideal outcomes:

- Reference results: For each test query, a list of expected results in the right order.

- Annotations: Each result labeled relevant/non-relevant, optionally with priority.

- Representative coverage: Include diverse query types (exact matches, synonyms, typos, contextual queries).

Metrics for quality evaluation

To objectively judge quality, we compare actual results to references using standard metrics:

1. Recall (completeness)

- Do results include everything they should?

- Are all relevant items present?

2. Ranking quality (ordering)

- Are results in the correct order?

- Are the most relevant results at the top?

Common metrics include NDCG (Normalized Discounted Cumulative Gain), which captures both completeness and ordering. Other useful metrics are Precision@K (how many relevant items in the top K positions) and MRR (Mean Reciprocal Rank), which measures the position of the first relevant result.
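These metrics can be computed per query as follows; MRR is the mean of the reciprocal rank over all test queries. The sketch uses the linear-gain form of DCG, one of two common variants, and a single hypothetical reference query with graded relevance.

```python
import math


def precision_at_k(ranked, relevant, k):
    """Share of the top-k results that are relevant."""
    return sum(1 for doc in ranked[:k] if doc in relevant) / k


def recall_at_k(ranked, relevant, k):
    """Share of all relevant items that appear in the top k."""
    return sum(1 for doc in ranked[:k] if doc in relevant) / len(relevant)


def reciprocal_rank(ranked, relevant):
    """1 / position of the first relevant result, or 0 if none is returned."""
    for position, doc in enumerate(ranked, start=1):
        if doc in relevant:
            return 1.0 / position
    return 0.0


def ndcg_at_k(ranked, gains, k):
    """NDCG@k; gains maps each doc to its relevance grade (missing = 0)."""
    def dcg(docs):
        return sum(gains.get(doc, 0) / math.log2(i + 2) for i, doc in enumerate(docs[:k]))
    ideal_dcg = dcg(sorted(gains, key=gains.get, reverse=True))
    return dcg(ranked) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical reference for one test query: 2 = ideal hit, 1 = acceptable.
gains = {"sku-03": 2, "sku-17": 1, "sku-42": 1}
relevant = set(gains)
ranked = ["sku-17", "sku-03", "sku-88", "sku-42"]  # what the engine returned
print(precision_at_k(ranked, relevant, 3), recall_at_k(ranked, relevant, 3),
      reciprocal_rank(ranked, relevant), round(ndcg_at_k(ranked, gains, 3), 3))
```

For this ranking, Precision@3 and Recall@3 are both 2/3 and the reciprocal rank is 1.0. NDCG@3 is only about 0.72, because the most relevant item sits in second place.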

Iterative optimization

Hyperparameter search proceeds iteratively:

- Set initial weights: Start with sensible defaults.

- Test combinations: Systematically vary:

  - Field weights for full-text (e.g., product title vs. description).

  - Weights for vector fields (embeddings from different document parts).

  - Boosts for scoring functions (margin, recency, popularity).

  - Aggregation functions (how to combine scoring functions).

- Evaluate: Run the test dataset for each combination and compute metrics.

- Select the best: Choose the parameter set with the strongest metrics.

- Refine: Narrow around the best region and repeat as needed.

This can be time-consuming, but it’s essential for optimal results. Automation lets you test hundreds or thousands of combinations to find the best.
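Here is a compact sketch of the loop. It runs a grid search over three weights (text, vector, margin) and scores each combination by mean NDCG@3 on a hypothetical two-query test set. Real setups vary many more parameters. They would also typically query the search service for each combination instead of re-ranking precomputed signals.

```python
import itertools
import math


def ndcg_at_3(ranked, gains):
    """Linear-gain NDCG@3 against reference relevance grades."""
    def dcg(docs):
        return sum(gains.get(doc, 0) / math.log2(i + 2) for i, doc in enumerate(docs[:3]))
    ideal = dcg(sorted(gains, key=gains.get, reverse=True))
    return dcg(ranked) / ideal if ideal else 0.0


# Hypothetical test set: per query, (text, vector, margin) signals and reference grades.
TEST_SET = [
    {"candidates": {"a": (0.9, 0.2, 0.1), "b": (0.4, 0.9, 0.5), "c": (0.1, 0.3, 0.9)},
     "gains": {"b": 2, "a": 1}},
    {"candidates": {"d": (0.8, 0.3, 0.2), "e": (0.3, 0.8, 0.1), "f": (0.6, 0.6, 0.8)},
     "gains": {"f": 2, "e": 1}},
]


def rank(candidates, w_text, w_vector, w_margin):
    """Order candidates by a weighted sum of their signals."""
    def score(doc):
        text, vector, margin = candidates[doc]
        return w_text * text + w_vector * vector + w_margin * margin
    return sorted(candidates, key=score, reverse=True)


def evaluate(weights):
    """Mean NDCG@3 over the test set for one weight combination."""
    return sum(ndcg_at_3(rank(q["candidates"], *weights), q["gains"])
               for q in TEST_SET) / len(TEST_SET)


grid = [0.0, 0.5, 1.0, 2.0]
results = {weights: evaluate(weights) for weights in itertools.product(grid, repeat=3)}
best_weights = max(results, key=results.get)
print(best_weights, results[best_weights])
```

To refine, repeat the search with a finer grid centered on `best_weights`. With realistic parameter counts, random or Bayesian search usually scales better than a full grid.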

Monitoring and continuous improvement

Even after tuning, ongoing monitoring and iteration are crucial.

Tracking user behavior

A key signal is whether users click the results they’re shown. If they skip the first result and click the third or fourth, your ranking likely needs work.

Track:

- CTR (Click-through rate): How often users click.

- Click position: Which rank gets the click (ideally the top results).

- No-click queries: Queries with zero clicks may indicate poor results.
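These signals can be derived from a simple search log. The sketch assumes a hypothetical log format with at most one click per search, so CTR here is the share of searches that received a click. The threshold for "skipping the top results" (a mean click position of 3 or worse) is likewise an assumption.

```python
from collections import defaultdict

# Hypothetical search log: one record per search; clicked_position is 1-based or None.
LOG = [
    {"query": "laptop", "clicked_position": 1},
    {"query": "laptop", "clicked_position": 2},
    {"query": "usb-c hub", "clicked_position": 4},
    {"query": "usb-c hub", "clicked_position": 3},
    {"query": "hdmi 2.1 cable", "clicked_position": None},
    {"query": "hdmi 2.1 cable", "clicked_position": None},
]


def click_report(log, bad_position=3):
    """Per-query CTR and mean click position, plus flags for likely ranking problems."""
    searches = defaultdict(int)
    clicks = defaultdict(list)
    for record in log:
        searches[record["query"]] += 1
        if record["clicked_position"] is not None:
            clicks[record["query"]].append(record["clicked_position"])
    report = {}
    for query, count in searches.items():
        positions = clicks[query]
        mean_pos = sum(positions) / len(positions) if positions else None
        report[query] = {
            "ctr": len(positions) / count,
            "mean_click_position": mean_pos,
            "no_click": not positions,
            "skips_top_results": mean_pos is not None and mean_pos >= bad_position,
        }
    return report


report = click_report(LOG)
flagged = [q for q, r in report.items() if r["no_click"] or r["skips_top_results"]]
print(flagged)
```

Queries in `flagged` are natural candidates for diagnosis and for the evaluation corpus.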

Analyzing problem cases

When you find queries where users avoid the top results:

- Log these cases: Save the query, returned results, and the clicked position.

- Diagnose: Why did the system rank poorly? Missing relevant items? Wrong ordering?

- Augment the test set: Add these cases to your evaluation corpus.

- Adjust weights/rules: Update weights or introduce new heuristics as needed.

This iterative loop ensures the system keeps improving and adapts to real user behavior.

Implementing on Azure: AI search and OpenAI embeddings

All of the above can be implemented effectively with Microsoft Azure.

Azure AI Search

Azure AI Search (formerly Azure Cognitive Search) provides:

- Hybrid search: Native support for combining full-text (BM25) and vector search.

- HNSW indexes: An efficient HNSW implementation for vector retrieval.

- Scoring profiles: A flexible framework for custom scoring functions.

- Text weights: Per-field weighting for full-text.

- Vector weights: Per-field weighting for vector embeddings.

Scoring profiles can combine:

- Magnitude scoring for numeric values (margin, popularity).

- Freshness scoring for temporal values (created/updated dates).

- Text weights for full-text fields.

- Vector weights for embedding fields.

- Aggregation functions to blend multiple scoring signals.
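As an illustration, here is a scoring profile written as a Python dict and serialized to the JSON shape used in an Azure AI Search index definition. The field names (`title`, `description`, `margin`, `popularity`, `lastUpdated`), boosts, and ranges are hypothetical starting points for hyperparameter search. Check the property names against the current Azure AI Search REST API reference before use.

```python
import json

scoring_profile = {
    "name": "relevance-margin-freshness",
    # Per-field weights for the full-text (BM25) part of the score.
    "text": {"weights": {"title": 3.0, "description": 1.0}},
    "functions": [
        {   # Higher margin -> higher boost, linear between 0 and 40.
            "type": "magnitude",
            "fieldName": "margin",
            "boost": 2.0,
            "interpolation": "linear",
            "magnitude": {"boostingRangeStart": 0, "boostingRangeEnd": 40,
                          "constantBoostBeyondRange": True},
        },
        {   # Popularity with diminishing returns.
            "type": "magnitude",
            "fieldName": "popularity",
            "boost": 1.5,
            "interpolation": "logarithmic",
            "magnitude": {"boostingRangeStart": 0, "boostingRangeEnd": 1000,
                          "constantBoostBeyondRange": True},
        },
        {   # Items updated within the last 90 days (ISO 8601 duration) get a boost.
            "type": "freshness",
            "fieldName": "lastUpdated",
            "boost": 1.5,
            "interpolation": "quadratic",
            "freshness": {"boostingDuration": "P90D"},
        },
    ],
    # How the function boosts are combined.
    "functionAggregation": "sum",
}

index_fragment = {
    "scoringProfiles": [scoring_profile],
    "defaultScoringProfile": scoring_profile["name"],
}
payload = json.dumps(index_fragment, indent=2)
print(payload)
```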

OpenAI embeddings

For embeddings, we use OpenAI models such as text-embedding-3-large:

- High-quality embeddings: Strong multilingual performance, including Czech.

- Consistent API: Straightforward integration with Azure AI Search.

- Scalability: Handles high request volumes.

Multilingual capability makes these embeddings particularly suitable for Czech and other smaller languages.

Integration

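Azure AI Search can use Azure OpenAI embedding models directly, which simplifies integration. A vectorizer defined in the index embeds query text automatically at query time. During indexing, an indexer skillset with the Azure OpenAI embedding skill can generate the document embeddings for the vector fields.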

Microsoft Ignite 2025: The shift from AI experiments to enterprise-grade agents

1. AI agents move centre stage

Microsoft’s headline reveal, Agent 365, positions AI agents as the new operational layer of the digital workplace. It provides a central hub to register, monitor, secure, and coordinate agents across the organisation.

At the same time, Microsoft 365 Copilot introduced dedicated Word, Excel, and PowerPoint agents, capable of autonomously generating, restructuring, and analysing content based on business context.

Why this matters

Enterprises are shifting from “asking AI questions” to “assigning AI work”. Agent-based architectures will gradually replace many single-purpose assistants.

What organisations can do

- Identify workflows suitable for autonomous agents

- Standardise agent behaviour and permissions

- Start pilot deployments inside Microsoft 365 ecosystems

2. Integration and orchestration become non-negotiable

Microsoft emphasised interoperability through the Model Context Protocol (MCP). Agents across Teams, Microsoft 365, and third-party apps can now share context and execute coordinated multi-step workflows.

Why this matters

Real automation requires more than standalone copilots — it requires orchestration between tools, data sources, and departments.

What organisations can do

- Map cross-app workflows

- Connect productivity, CRM/ERP and operational platforms

- Design agent ecosystems rather than isolated assistants

3. Governance and security move into the spotlight

As agents gain autonomy, Microsoft introduced governance capabilities such as:

- visibility into permissions

- behavioural monitoring

- integration with Defender, Entra, and Purview

- centralised policy control

- data-loss prevention

Why this matters

AI at scale must be fully observable and compliant. Governance will become a foundational requirement for all agent deployments.

What organisations can do

- Define who is allowed to create/modify agents

- Establish audit and monitoring standards

- Build guardrails before rolling out automation


4. Windows, Cloud PCs, and the rise of the AI-enabled workspace

Microsoft presented Windows 11 and Windows 365 as key components of the AI-first workplace. Features include:

- AI-enhanced Cloud PCs

- support for shared and frontline devices

- local agent inference on capable hardware

- endpoint-level automation

Why this matters

Distributed teams gain consistent, secure work environments with native AI capabilities.

What organisations can do

- Evaluate Cloud PC scenarios

- Modernise workplace setups for agent-driven workflows

- Explore AI-enabled devices for operational teams

5. AI infrastructure and Azure evolution

Ignite highlighted continued investment in Azure AI capabilities, including:

- improved model hosting and versioning

- hybrid CPU/GPU inference

- faster deployment pipelines

- more cost-efficient fine-tuning

- enhanced governance for AI training data


Why this matters

Scalable data pipelines and model infrastructure remain essential foundations for any agent-driven environment.

What organisations can do

- Update data architecture for AI-readiness

- Implement vector indexing and retrieval pipelines

- Optimise model hosting costs

6. Copilot Studio and plug-in ecosystem expand rapidly

Copilot Studio received major updates, transforming it into a central automation and integration hub. New capabilities include:

- custom agent creation with visual logic

- no-code multi-step workflows

- plug-ins for internal APIs and line-of-business systems

- improved grounding using enterprise data

- expanded connectors for CRM/ERP/event platforms

Why this matters

Organisations can build specialised copilots and agents — connected to their internal systems and business logic.

What organisations can do

- Develop domain-specific copilots

- Use connectors to integrate existing systems

- Leverage visual logic for quick experiments

7. Fabric + Azure AI integration

Microsoft Fabric now provides deeper AI readiness features:

- tight integration with Azure AI Studio

- automated pipelines for AI data preparation

- vector indexing and RAG capabilities inside OneLake

- enhanced lineage and governance

- performance boosts for large-scale analytics

Why this matters

AI agents depend on clean, governed, real-time data. Microsoft states that Fabric now enables building unified data + AI environments more efficiently.

What organisations can do

- Consolidate disparate data pipelines into Fabric

- Implement vector search for internal knowledge retrieval

- Build governed AI datasets with lineage tracking

What this means for companies

Across all announcements, one trend is consistent: AI is becoming an operational layer—not an add-on.

For organisations in finance, energy, logistics, retail, or event management, this brings clear implications:

- It’s time to move from experimentation to real deployment.

- Automated agents will replace many single-purpose copilots.

- Governance frameworks must be in place before scaling.

- Integration across apps, data sources, and workflows is essential.

- AI will increasingly live inside productivity tools employees already use.

- The competitive advantage will come from how well agents connect to business processes—not from which model is used.

BigHub is well positioned to guide you through this transition with personalised strategy, architecture, implementation, and optimisation.

How enterprises should prepare for 2025–2026

Here are the next steps organisations should consider:

1. Map high-value workflows for agent automation

Identify repetitive, cross-team workflows where autonomous task execution delivers value.

2. Design your agent governance framework

Define roles, access boundaries, audit controls, and operational monitoring.

3. Prepare your data infrastructure

Ensure clean, accessible, governed data that agents can safely use.

4. Integrate your productivity tools

Leverage Teams, Microsoft 365, and MCP-compatible apps to reduce friction.

5. Start with a controlled pilot

Choose one business unit or workflow to test agent deployment under monitoring.

6. Plan for organisation-wide rollout

Once guardrails are validated, scale agents into more complex processes.

From theory to practice: How BigHub prepares CVUT FJFI students for the world of data and AI

Bridging academia and real-world practice is key

University study and business practice in data and AI often remain far apart. At the Faculty of Nuclear Sciences and Physical Engineering of CVUT (FJFI), we are changing that. Since the 2021/2022 academic year, BigHub has been teaching full-semester courses that connect academia with the real world of data. And it’s not just lectures: students get hands-on experience with real technologies in a business-like environment, guided by professionals who deal with such projects every day.

What brought us to FJFI

BigHub has a personal connection to CVUT FJFI. Many of us—including CEO Karel Šimánek, COO Ing. Tomáš Hubínek, and more than ten other colleagues—studied there ourselves. We know the faculty produces top-tier mathematicians, physicists, and engineers. But we also know that these students often lack insight into how data and AI function in business contexts.

That’s why we decided to change it. Not as a recruitment campaign, but as a long-term contribution to Czech education. We want students to see real examples, try modern tools, and be better prepared for their careers.

Two courses, two semesters

18AAD – Applied Data Analysis (summer semester)

The first course launched in the 2021/2022 academic year, led by Ing. Tomáš Hubínek. Its goal is to give students an overview of how large-scale data work looks in practice. Topics include:

- data organization and storage,

- frameworks for big data computation,

- graph analysis,

- cloud services,

- basics of AI and ML.

Strong emphasis is placed on practical exercises. Students work in Microsoft Azure, explore different technologies, and have room for discussion. Selected lectures also feature BigHub experts who share insights from real projects.

18BIG – Data in Business (winter semester)

In 2024, we added a second course that builds on 18AAD. It is taught by doc. Ing. Jan Kučera, CSc. and doc. Ing. Petr Pokorný, Ph.D. The course goes deeper and focuses on:

- data governance and data management in organizations,

- integration architectures,

- data platforms and AI readiness,

- best practices from real-world projects.

While 18AAD shows what can be done with data, 18BIG demonstrates how it actually works inside companies.

Above-average student interest

Elective courses at FJFI usually attract only a few students. Our courses, however, enroll 20–35 students every year—an above-average number for the faculty.

Feedback is consistent: students appreciate the practical focus, open discussions, and the chance to ask professionals about real-world situations. For many, it’s their first encounter with technologies actually used in business.


Beyond the classroom

Our involvement doesn’t end with teaching. Together with the Department of Software Engineering, we’ve helped revise curricula and graduate profiles, enabling the faculty to respond more flexibly to what companies in the data and AI fields really need. This improves the quality of education across the entire faculty, not just for students who take our electives.

It’s not about recruitment

Sometimes, a student later joins BigHub — but that’s not the goal. The goal is to ensure graduates aren’t surprised by how data work really looks. We want them to have broader, more practical knowledge and hands-on experience with modern tools. It’s our way of giving back to the institution that shaped us and contributing to the Czech tech ecosystem as a whole.

Collaboration with FJFI goes beyond teaching. Since BigHub’s founding, we’ve supported the student union and regularly participated in the faculty’s Dean’s Cup sports event, playing futsal, beach volleyball, and more. This year, we also submitted several grant applications together and hope to soon collaborate on joint technical projects. We believe a strong community and informal connections between students and professionals are just as important as textbook knowledge.

What’s next?

Our cooperation with CVUT FJFI is long-term. Courses 18AAD and 18BIG will continue, and we are exploring ways to expand their scope. We see that students crave practical experience and that bridging academia with real-world practice truly works. If this helps improve the quality of data and AI projects in Czech companies, it will be the best proof that our effort is worthwhile.

EU AI Act: What it is, who it applies to, and how we can help your company comply stress-free

What the AI Act is and why it was introduced

The AI Act is the first EU-wide law that sets rules for the development and use of artificial intelligence. The rationale behind it is simple: only with clear rules can AI be safe, transparent, and ethical for both companies and their customers.

Artificial intelligence is increasingly penetrating all areas of life and business, so the EU aims to ensure that its use and development are responsible and free from misuse, discrimination, or other negative impacts. The AI Act is designed to protect consumers, promote fair competition, and establish uniform rules across all EU member states.

Who the AI Act applies to

The devil is often in the details, and the AI Act is no exception. This legislation affects not only companies that develop AI but also those that use it in their products, services, or internal processes. Typically, companies that must comply with the AI Act include those that:

- Develop AI

- Use AI for decision-making about people, such as recruitment or employee performance evaluation

- Automate customer services, for example, chatbots or voice assistants

- Process sensitive data using AI

- Integrate AI into products and services

- Operate third-party AI systems, such as implementing pre-built AI solutions from external providers

The AI Act distinguishes between standard software and AI systems, so the first step is always to determine how a solution behaves. Does it operate autonomously and adaptively, learning from data and optimizing its results, or does it merely execute predefined instructions? Only the former meets the definition of an AI system.

Importantly, the legislation applies not only to new AI applications but also to existing ones, including machine learning systems.

To save you from spending dozens of hours worrying whether your company fully complies, BigHub is ready to handle AI Act implementation for you.

What the AI Act regulates

The AI Act defines many detailed requirements, but for businesses using AI, the key areas to understand include:

1. Risk classification

The legislation categorizes AI systems by risk level, from minimal risk to high risk, and even banned applications.

2. Obligations for developers and operators

These include complying with safety standards, maintaining up-to-date documentation, and ensuring strict oversight.

3. Transparency and explainability

Users of AI tools must be aware they are interacting with artificial intelligence.

4. Prohibited AI applications

For example, systems that manipulate human behavior or intentionally discriminate against specific groups.

5. Monitoring and incident reporting

Companies must report adverse events or malfunctions of AI systems.

6. Processing sensitive data

The AI Act regulates the use of personal, biometric, or health data of anyone interacting with AI tools.

Avoid massive fines

Penalties for non-compliance with the AI Act are high: fines can reach 7% of a company’s global annual revenue, which can amount to millions of euros for some businesses.

This makes it crucial to implement the new AI regulations promptly in all areas where AI is used.
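To put the percentage in perspective, the sketch below computes the maximum fine for the most serious category of breach, prohibited AI practices. It assumes the ceiling in the final regulation text: EUR 35 million or 7% of total worldwide annual turnover for the preceding financial year, whichever is higher. Other breaches carry lower ceilings, SMEs are treated differently, and the fine actually imposed is set case by case, so the function returns only an upper bound.

```python
def max_fine_prohibited_practice(worldwide_turnover_eur: float) -> float:
    """Upper bound of an AI Act fine for prohibited AI practices (illustrative).

    Assumes a ceiling of EUR 35 million or 7% of total worldwide annual
    turnover, whichever is higher. Lower tiers and SME rules are not modeled.
    """
    fixed_cap_eur = 35_000_000
    turnover_cap_eur = worldwide_turnover_eur * 7 / 100
    return max(fixed_cap_eur, turnover_cap_eur)


# EUR 2 billion turnover: 7% is EUR 140 million, above the fixed cap.
print(f"{max_fine_prohibited_practice(2_000_000_000):,.0f}")  # 140,000,000
# EUR 100 million turnover: 7% is only EUR 7 million, so the fixed cap applies.
print(f"{max_fine_prohibited_practice(100_000_000):,.0f}")  # 35,000,000
```

The "whichever is higher" rule means that for smaller companies the fixed amount, not the percentage, is the binding ceiling.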

Let us handle AI Act compliance for you

Don’t have dozens of hours to study complex laws, and don’t want to risk huge fines? Why not let BigHub manage AI Act compliance for your company? In collaboration with HAVEL & PARTNERS, the largest Czech-Slovak law firm, which also operates internationally, we help clients worldwide implement best practices and frameworks, accelerate innovation, and optimize processes, and we are ready to do the same for you.

We offer turnkey AI solutions with AI Act compliance built in. Our process includes:

- Creating internal AI usage policies for your company

- Auditing the AI applications you currently use

- Ensuring existing and newly implemented AI applications comply with the AI Act

- Assessing risks so you know which AI systems you can safely use

- Mapping your current situation and helping with necessary documentation and process obligations

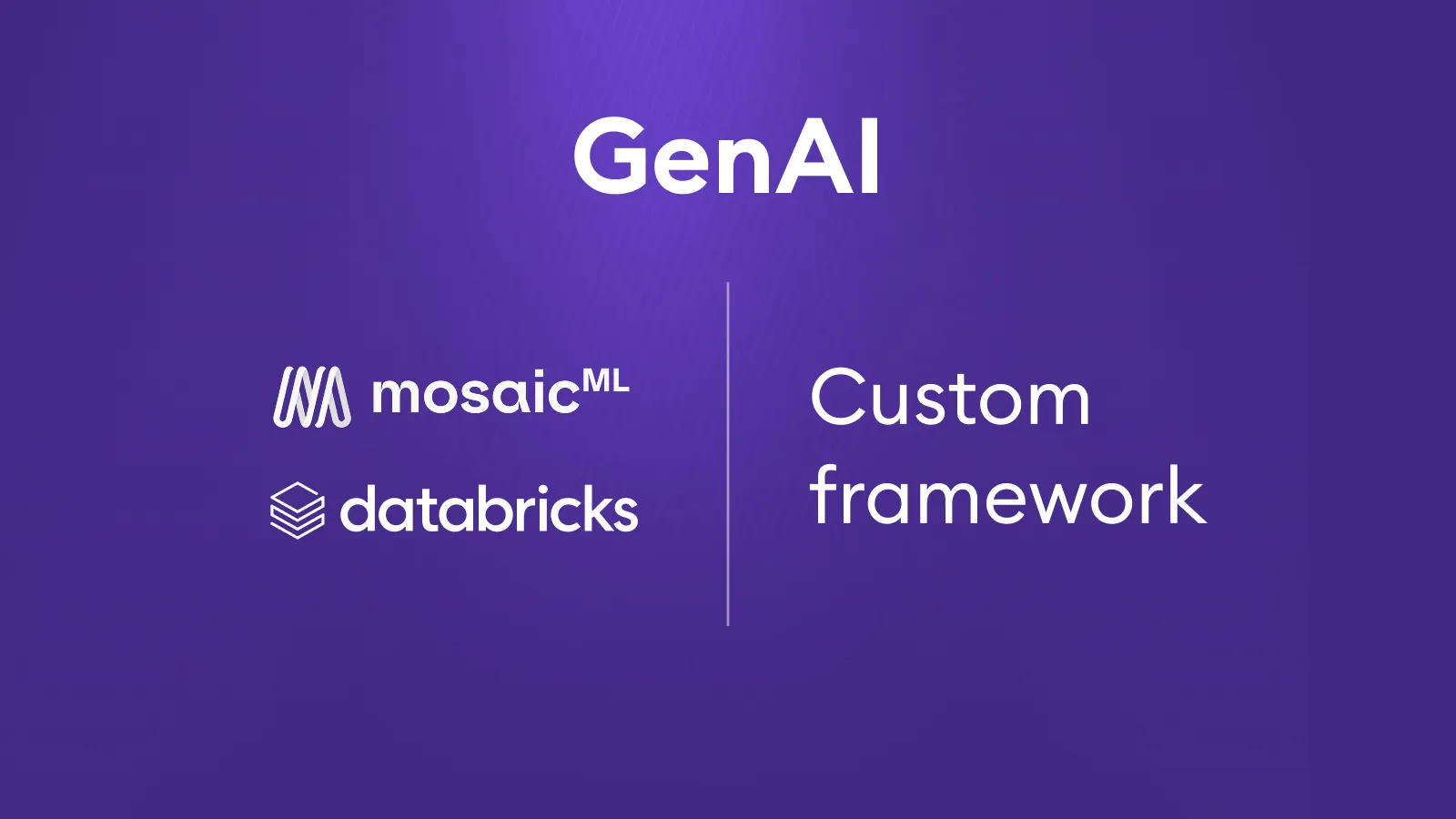

Databricks Mosaic vs. custom frameworks: Choosing the right path for GenAI

Why Companies Choose Databricks Mosaic

For organizations that already use Databricks as their data platform, it is natural to also consider Mosaic. Staying within a single ecosystem brings architectural simplicity, easier management, and faster time-to-market.

Databricks Mosaic offers several clear advantages:

- Simplicity: building internal chatbots and basic agents is quick and straightforward.

- Governance by design: logging, lineage, and cost monitoring are built in.

- Data integration: MCP servers and SQL functions allow agents to work directly with enterprise data.

- Developer support: features like Genie (a Fabric Copilot competitor) and assisted debugging accelerate development.

For straightforward scenarios, such as internal assistants working over corporate data, Databricks Mosaic is fast and effective. We’ve successfully deployed Mosaic for a large manufacturing company and a major retailer, where the need was simply to query and retrieve data.

Where Databricks Mosaic Falls Short

More complex projects introduce very different requirements – around latency, accuracy, multi-agent logic, and integration with existing enterprise systems. Here, Databricks Mosaic quickly runs into limits:

- Structured output: Databricks Mosaic cannot effectively enforce structured output, which impacts the quality and operational stability of various solutions (e.g., voicebots or OCR).

- Multi-step workflows: processes such as insurance claims, underwriting, or policy issuance are either unfeasible or overly complicated within Databricks Mosaic.

- Latency-sensitive scenarios: Databricks Mosaic adds an extra endpoint layer between user and model, which makes low-latency use cases difficult.

- Integration outside Databricks: unless you only use Vector Search and Unity Catalog, connecting to other systems is more complex than in a Python-based custom framework.

- Limited model catalog: only a handful of models are available. You cannot bring your own models or integrate models hosted in other clouds.

Even Databricks itself admits Mosaic isn’t intended to replace specialized frameworks. That’s true to a degree, but the overlap is real – and in advanced use cases, Mosaic’s lack of flexibility becomes a bottleneck.
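For comparison, this is roughly how a custom Python framework can enforce structured output: validate every model reply against a schema and retry with feedback when validation fails. A minimal stdlib-only sketch; the `ClaimExtraction` fields are a hypothetical claims-intake schema, and `call_model` is any callable returning the model's text (a scripted stub in the usage example). Production code would typically use a schema-validation library or a model provider's native structured-output mode instead.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class ClaimExtraction:
    # Hypothetical schema for a claims-intake use case.
    policy_number: str
    incident_date: str  # ISO 8601 date, e.g. "2024-05-17"
    amount_eur: float


EXPECTED_TYPES = {"policy_number": str, "incident_date": str, "amount_eur": (int, float)}


def parse_claim(raw: str) -> ClaimExtraction:
    """Parse a model reply as JSON and enforce exact field names and types."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if set(data) != set(EXPECTED_TYPES):
        raise ValueError(f"field mismatch: {sorted(set(data) ^ set(EXPECTED_TYPES))}")
    for name, expected in EXPECTED_TYPES.items():
        value = data[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"{name} has wrong type {type(value).__name__}")
    return ClaimExtraction(data["policy_number"], data["incident_date"], float(data["amount_eur"]))


def extract_with_retry(call_model: Callable[[str], str], prompt: str, max_attempts: int = 3) -> ClaimExtraction:
    """Call the model until its reply passes validation, feeding errors back."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return parse_claim(call_model(prompt))
        except ValueError as err:
            last_error = err
            prompt += f"\n\nYour previous reply was invalid ({err}). Reply with valid JSON only."
    raise RuntimeError(f"no valid output after {max_attempts} attempts") from last_error


# Scripted stub: the first reply is missing fields, the second is valid.
replies = iter([
    '{"policy_number": "P-1"}',
    '{"policy_number": "P-1", "incident_date": "2024-05-17", "amount_eur": 1200}',
])
claim = extract_with_retry(lambda prompt: next(replies), "Extract the claim fields as JSON.")
print(claim)  # ClaimExtraction(policy_number='P-1', incident_date='2024-05-17', amount_eur=1200.0)
```

Because the validator sits in your own code, the downstream steps (a voicebot turn, an OCR form write) only ever receive objects that match the schema.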

Where a Custom Framework Makes Sense

A custom framework shines where projects demand complex logic, multi-agent orchestration, streaming, or low-latency execution:

- Multiple agents: agents with different roles and skills collaborating on a single task.

- Streaming and real-time: essential for call centers, voicebots, and fraud detection.

- Custom logic: precisely defined workflows and multi-step processes.

- Regulatory compliance: full transparency and auditability in line with the AI Act.

- Flexibility: ability to use any libraries, models, and architectures without vendor lock-in.

This doesn’t mean Databricks Mosaic can’t ever be used for business-critical workloads – in some cases it can. But in applications where latency, structured output, or high precision are non-negotiable, Mosaic is not yet mature enough.
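To illustrate what custom logic and multi-agent orchestration look like in code, here is a minimal sketch of a claims workflow in which role-specific agents run in an explicit order and every step is written to an audit log. The three agents are hypothetical stand-ins for model calls (simple rules here, so the example runs offline), and the thresholds are illustrative.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ClaimState:
    text: str
    facts: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)


Agent = Callable[[ClaimState], dict]


def intake_agent(state: ClaimState) -> dict:
    # Stand-in for an LLM extraction step: reads the first number in the text.
    match = re.search(r"\d+", state.text)
    return {"amount_eur": int(match.group()) if match else 0}


def fraud_agent(state: ClaimState) -> dict:
    # Stand-in for a fraud-scoring model: a single threshold rule.
    return {"fraud_flag": state.facts["amount_eur"] > 10_000}


def decision_agent(state: ClaimState) -> dict:
    # Auto-approve small, unflagged claims; route everything else to a human.
    auto = not state.facts["fraud_flag"] and state.facts["amount_eur"] <= 2_000
    return {"decision": "approve" if auto else "escalate_to_human"}


def run_workflow(text: str, steps: list[tuple[str, Agent]]) -> ClaimState:
    state = ClaimState(text)
    for name, agent in steps:
        output = agent(state)
        state.facts.update(output)
        state.audit_log.append((name, output))  # every step is recorded for audit
    return state


pipeline = [("intake", intake_agent), ("fraud", fraud_agent), ("decision", decision_agent)]
result = run_workflow("Broken windshield, repair quote 850 EUR", pipeline)
print(result.facts)  # {'amount_eur': 850, 'fraud_flag': False, 'decision': 'approve'}
```

Keeping the step order and the audit log in plain code is what makes such a workflow easy to inspect, test, and explain to an auditor.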

How BigHub Approaches It

From our experience, there’s no one-size-fits-all answer. Databricks Mosaic works well in some contexts, while in others a custom framework is the only viable option.

- Manufacturing & Retail: We used Databricks Mosaic to build internal assistants that answer queries over corporate data (SQL queries). Deployment was fast, governance was embedded, and the solution fit the use case perfectly.

- Insurance (Claims Processing): Here, Databricks Mosaic simply wasn’t sufficient. It lacked structured output, multi-agent orchestration, and voice processing. We delivered a custom framework that achieved the required accuracy, supported multi-step workflows, and met audit requirements under the AI Act.

- Banking (Underwriting, Policy Issuance): Banking workflows often involve multiple steps and integration with core systems. Implementing these in Databricks Mosaic is overly complex. We used a custom middleware layer that orchestrates multiple agents and supports models from different clouds.

- Call Centers & OCR: Latency-critical applications and use cases requiring structured outputs (e.g., form data extraction, voicebots) are not supported by Databricks Mosaic. These are always delivered using custom solutions.

Our role is not to push a single technology but to guide clients toward the best choice. Sometimes Databricks Mosaic is the right fit, sometimes a custom framework is the only way forward. We ensure both a quick start and long-term sustainability.

Our Recommendation

- Databricks Mosaic: best suited for organizations already invested in Databricks that want to deploy internal assistants or basic agents with strong governance and monitoring.

- Custom framework: the right choice when projects require complex multi-step workflows, multi-agent orchestration, structured outputs, or low latency.

At BigHub, we’ve worked extensively with both approaches. What we deliver is not just technology, but the expertise to recommend and build the right combination for each client’s unique situation.

Why MCP might be the HTTP of the AI-first era

What Is MCP – and Why Should You Care?

Model Context Protocol may sound like something out of an academic paper or internal Big Tech documentation. But in reality, it’s a standard that enables different AI systems to seamlessly communicate—not just with each other, but also with APIs, business tools, and humans.

Today’s AI tools—whether chatbots, voice assistants, or automation bots—are typically limited to narrow tasks and single systems. MCP changes that. It allows intelligent systems to:

- Check your e-commerce order status

- Review your insurance contract

- Reschedule your doctor’s appointment

- Arrange delivery and payment

All without switching apps or platforms. And more importantly: without every company needing to build its own AI assistant. All it takes is making services and processes “MCP-accessible.”
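To make "MCP-accessible" more concrete: MCP messages follow JSON-RPC 2.0, and a server advertises what it can do as tools, each with a name, a description, and a JSON Schema for its input. Clients discover tools with `tools/list` and invoke them with `tools/call`. The sketch below is a stdlib-only dispatcher showing those message shapes for a hypothetical `get_order_status` tool; it deliberately omits the transport (such as stdio), the `initialize` handshake, and authorization, all of which a real server, for example one built with an official MCP SDK, would handle.

```python
import json

# Hypothetical order data standing in for an e-commerce backend.
ORDERS = {"A-1001": "shipped", "A-1002": "processing"}

# Tool descriptor: name, description, and a JSON Schema for the arguments.
TOOLS = [
    {
        "name": "get_order_status",
        "description": "Return the current status of an order.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]


def rpc_result(request: dict, result: dict) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "result": result})


def rpc_error(request: dict, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"),
                       "error": {"code": code, "message": message}})


def handle(message: str) -> str:
    """Answer one JSON-RPC 2.0 request using the MCP tools/list and tools/call shapes."""
    request = json.loads(message)
    method, params = request.get("method"), request.get("params", {})
    if method == "tools/list":
        return rpc_result(request, {"tools": TOOLS})
    if method == "tools/call":
        if params.get("name") != "get_order_status":
            return rpc_error(request, -32602, f"Unknown tool: {params.get('name')}")  # invalid params
        order_id = params.get("arguments", {}).get("order_id")
        status = ORDERS.get(order_id)
        text = f"Order {order_id}: {status}" if status else f"Order {order_id} not found"
        # Tool-level failures go into the result with isError, not into a protocol error.
        return rpc_result(request, {"content": [{"type": "text", "text": text}], "isError": status is None})
    return rpc_error(request, -32601, f"Method not found: {method}")


call = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "get_order_status", "arguments": {"order_id": "A-1001"}},
}
print(handle(json.dumps(call)))
```

The company only describes and implements the tool; the customer's own assistant decides when to call it.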

From AI as a Tool to AI as an Interface

Until now, AI in business has mostly served as a support tool for employees—helping with search, data analysis, or faster decision-making. But MCP unlocks a new paradigm:

Instead of building AI tools for internal use, companies will expose their services to be used by external AI systems—especially those owned by customers themselves.

That means the customer is no longer forced to use the company’s interface. They can interact with your services through their own AI assistant, tailored to their preferences and context. It’s a fundamental shift. Just as the web changed how we accessed information, and mobile apps changed how we shop or travel, MCP and intelligent interfaces will redefine how people interact with companies.

The AI-First Era Is Already Here

It wasn’t long ago that people began every query with Google. Today, more and more users turn first to ChatGPT, Perplexity, or their own digital assistant. That shift is real: AI is becoming the entry point to the digital world.

“Web-first” and “mobile-first” are no longer enough. We’re entering an AI-first era—where intelligent interfaces will be the first layer that handles requests, questions, and decisions. Companies must be ready for that.

What This Means for Companies

1. No More Need to Build Your Own Chatbot

Companies spend significant resources building custom chatbots, voice systems, and interfaces. These tools are expensive to maintain and hard to scale.

With MCP, the user shows up with their own AI system and expects only one thing: structured access to your services and information. No need to worry about UX, training models, or customer flows—just expose what you do best.

2. Traditional Call Centers Become Obsolete

Instead of calling your support line, a customer can query their AI assistant, which connects directly to your systems, gathers answers, or executes tasks.

No queues. No wait times. No pressure on your staffing model. Operations move into a seamless, automated ecosystem.

3. New Business Models and Brand Trust

Because users will bring their own trusted digital interface, companies no longer carry the burden of poor chatbot experiences. And thanks to MCP’s built-in structure for access control and transparency, businesses can decide who sees what, when, and how—while building trust and reducing risks.

What This Means for Everyday Users

- One interface for everything: no more juggling dozens of logins, websites, or apps. One assistant does it all.

- True autonomy: your digital assistant can order products, compare options, request refunds, or manage appointments with no manual effort required.

- Smarter, faster decisions: the system knows your preferences, history, and goals and makes intelligent recommendations tailored to you.

Practical example:

You ask your AI to generate a recipe, check your pantry, compare prices across online grocers, pick the cheapest options, and schedule delivery—all in one go, no clicking required.
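The price-comparison step of that example boils down to a small optimization: for every ingredient missing from the pantry, pick the cheapest offer across grocers. A minimal sketch, assuming the assistant has already retrieved the recipe, the pantry contents, and per-grocer price lists (for instance through each grocer's MCP server); all names and prices are illustrative.

```python
def plan_shopping(recipe: set, pantry: set, offers: dict) -> dict:
    """Return {ingredient: (grocer, price)} with the cheapest offer per missing ingredient.

    offers maps grocer -> {ingredient: price}. On equal prices, the grocer
    whose name sorts first wins (tuple comparison).
    """
    plan = {}
    for ingredient in sorted(recipe - pantry):
        candidates = [(prices[ingredient], grocer)
                      for grocer, prices in offers.items() if ingredient in prices]
        if not candidates:
            raise LookupError(f"No grocer offers {ingredient}")
        price, grocer = min(candidates)
        plan[ingredient] = (grocer, price)
    return plan


recipe = {"pasta", "tomatoes", "basil", "olive oil"}
pantry = {"olive oil"}
offers = {
    "GrocerA": {"pasta": 1.20, "tomatoes": 2.50, "basil": 1.10},
    "GrocerB": {"pasta": 0.99, "tomatoes": 2.80},
}
plan = plan_shopping(recipe, pantry, offers)
print(plan)  # {'basil': ('GrocerA', 1.1), 'pasta': ('GrocerB', 0.99), 'tomatoes': ('GrocerA', 2.5)}
```

A real assistant would also weigh delivery fees and delivery slots, which can make a single-grocer basket cheaper than the per-item minimum.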

The Underrated Challenge: Data

For this to work, users will need to give their AI systems access to personal data. And companies will need to open up parts of their systems to the outside world. That’s where trust, governance, and security become mission-critical. MCP provides a standardized framework for managing access, ensuring safety, and scaling cooperation between systems—without replicating sensitive data or creating silos.

AI Agents: What they are and what they mean for your business

🧠 What Are AI Agents?

An AI agent is a digital assistant capable of independently executing complex tasks based on a specific goal. It’s more than just a chatbot answering questions. Modern AI agents can:

- Plan multiple steps ahead

- Call APIs, work with data, create content, or search for information

- Adapt their behavior based on context, user, or business goals

- Work asynchronously and handle multiple tasks simultaneously

In short, an AI agent functions like a virtual employee — handling tasks dynamically, like a human, but faster, cheaper, and 24/7.
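Under the hood, "plan, call APIs, adapt" usually reduces to a loop: the model picks the next action, the runtime executes the matching tool, and the result is fed back until the goal is met or a step budget runs out. A minimal sketch, assuming a hypothetical `choose_action` function that stands in for the LLM (scripted here so the example runs offline) and two hypothetical shop tools.

```python
from __future__ import annotations

from typing import Callable, Optional


# Hypothetical tools; real ones would wrap shop APIs or databases.
def search_products(query: str) -> list[str]:
    catalog = {"running shoes": ["TrailRunner 2", "RoadFlex"], "socks": ["DryFit Socks"]}
    return catalog.get(query, [])


def add_to_cart(item: str) -> str:
    return f"added {item}"


TOOLS: dict[str, Callable[[str], object]] = {
    "search_products": search_products,
    "add_to_cart": add_to_cart,
}


def choose_action(goal: str, history: list) -> Optional[tuple[str, str]]:
    """Stand-in for the LLM planner: returns (tool, argument), or None when done.

    A real planner would reason over the goal and history; this scripted one
    ignores the goal and always shops for running shoes.
    """
    if not history:
        return ("search_products", "running shoes")
    last_tool, _, last_result = history[-1]
    if last_tool == "search_products" and last_result:
        return ("add_to_cart", last_result[0])
    return None


def run_agent(goal: str, max_steps: int = 5) -> list:
    history = []  # (tool, argument, result) triples the planner observes
    for _ in range(max_steps):  # step budget guards against endless loops
        action = choose_action(goal, history)
        if action is None:
            break
        tool, argument = action
        history.append((tool, argument, TOOLS[tool](argument)))
    return history


for step in run_agent("Buy running shoes"):
    print(step)
```

Swapping the scripted planner for a model call changes the intelligence of the agent, but the loop, the tool registry, and the step budget stay the same.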

Why Are AI Agents Trending Right Now?

- Advancements in large language models (LLMs) like GPT-4, Claude, and Mistral allow agents to better understand and generate natural language.

- Automation is becoming goal-driven — instead of saying “write a script,” you can say “find the best candidates for this job.”

- Companies want to scale without increasing costs — AI agents can handle both routine and analytical tasks.

- Productivity and personalization are top priorities — AI agents enable both in real time.

What Do AI Agents Bring to Businesses?

✅ 1. Save Time and Costs

Unlike traditional automation focused on isolated tasks, AI agents can manage entire workflows. In e-commerce, for example, they can:

- Help choose the right product

- Recommend accessories

- Add items to the cart

- Handle complaints or returns

✅ 2. Boost Conversions and Loyalty

AI agents personalize conversations, learn from interactions, and respond more precisely to customer needs.

✅ 3. Team Relief and Scalability

Instead of manually handling inquiries or data, the agent works nonstop — error-free and without the need to hire more people.

✅ 4. Smarter Decision-Making

Internal agents can assist with competitive analysis, report generation, content creation, or demand forecasting.

AI Agents in Practice

AI Agent vs. Traditional Chatbot: What's the Difference?

What Does This Mean for Your Business?

Companies that implement AI agents today gain an edge — not just in efficiency, but in customer experience. In a world where “fast replies” are no longer enough, AI agents bring context, intelligence, and action — exactly what the modern customer expects.

What’s Next?

AI agents are quickly evolving from assistants to full digital colleagues. Soon, it won’t be unusual to have an “AI teammate” handling tasks, collaborating with your team, and helping your business grow.

GenAI is not the only type of AI: What every business leader should know

🧠 What Is Generative AI (GenAI)?

Generative AI focuses on creating content (text, images, video, or code) using generative models, such as large language models (LLMs), trained on huge datasets.

Typical use cases:

- Writing emails, articles, product descriptions

- Generating graphics and images

- Creating code or marketing copy

- Customer support via AI-powered chat

But despite its capabilities, GenAI isn't a one-size-fits-all solution.

What Other Types of AI Exist?

✅ 1. Analytical AI

This type of AI focuses on analyzing data, identifying patterns, and making predictions. It doesn't generate content but provides insights and decisions based on logic and data.

Use cases:

- Predicting customer churn or lifetime value

- Credit risk scoring

- Fraud detection

- Customer segmentation
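Customer segmentation is a good example of analytical AI that involves no content generation at all. A minimal sketch, assuming plain k-means clustering on two hypothetical features (annual spend and orders per year); real projects would scale the features first and typically use a library such as scikit-learn.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means: assign each point to its nearest centroid, then recompute centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # New centroid = mean of its cluster; keep the old one if the cluster is empty.
        centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels


# Hypothetical customers: (annual spend in thousands of EUR, orders per year)
customers = [(0.2, 1), (0.3, 2), (0.25, 1), (5.0, 40), (4.5, 35), (5.5, 42)]
centroids, labels = kmeans(customers, k=2)
print(labels)  # the three low spenders share one label, the three high spenders the other
```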

✅ 2. Optimization AI

Rather than analyzing or generating, this AI searches for the best possible solution to a defined goal within given constraints.

Use cases:

- Logistics and transportation planning

- Dynamic pricing

- Manufacturing and workforce scheduling
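Optimization AI starts from an objective and constraints rather than from patterns in data. A minimal dynamic-pricing sketch, assuming a hypothetical linear demand curve and an allowed price range as the constraint; it simply evaluates candidate prices and keeps the most profitable one. Real systems estimate demand from data and use dedicated solvers.

```python
def best_price(unit_cost, base_demand, slope, min_price, max_price, step=0.5):
    """Grid-search the profit-maximizing price, assuming demand = base_demand - slope * price.

    The range [min_price, max_price] is the business constraint. Returns (price, profit).
    """
    n_steps = int(round((max_price - min_price) / step))
    chosen_price, best_profit = min_price, float("-inf")
    for i in range(n_steps + 1):
        price = min_price + i * step  # computed from the index to avoid float drift
        demand = max(0.0, base_demand - slope * price)
        profit = (price - unit_cost) * demand
        if profit > best_profit:
            chosen_price, best_profit = price, profit
    return chosen_price, best_profit


# Illustrative numbers: unit cost 10, demand = 100 - 2 * price, prices between 10 and 50.
print(best_price(unit_cost=10, base_demand=100, slope=2, min_price=10, max_price=50))  # (30.0, 800.0)
```

For this demand curve the result can be checked by hand: profit (p - 10)(100 - 2p) peaks where 120 - 4p = 0, i.e. at p = 30.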

✅ 3. Symbolic AI (Rule-Based Systems)

This older but still relevant form of AI uses logic-based rules and decision trees. It is explainable, auditable, and reliable — especially in regulated environments.

Use cases:

- Legal or medical expert systems

- Regulatory compliance

- Automated decision-making in banking or insurance
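What makes rule-based systems explainable and auditable is that every decision can be traced back to the rules that fired. A minimal sketch of a hypothetical credit pre-screening rule set; the rules and thresholds are illustrative, not taken from any real lender.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    age: int
    monthly_income: float
    monthly_debt: float
    missed_payments: int


# Each rule: (identifier, condition that triggers a rejection, human-readable reason).
RULES = [
    ("R1", lambda a: a.age < 18, "applicant is under 18"),
    ("R2", lambda a: a.missed_payments > 2, "more than 2 missed payments in history"),
    ("R3", lambda a: a.monthly_debt > 0.4 * a.monthly_income, "debt exceeds 40% of income"),
]


def decide(applicant: Applicant):
    """Return (decision, fired_rules); fired_rules lists every triggered rule for the audit trail."""
    fired = [(rule_id, reason) for rule_id, condition, reason in RULES if condition(applicant)]
    return ("reject" if fired else "approve"), fired


print(decide(Applicant(age=35, monthly_income=3000, monthly_debt=1500, missed_payments=0)))
# ('reject', [('R3', 'debt exceeds 40% of income')])
```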

✅ 4. Reinforcement Learning

This AI learns by trial and error in dynamic environments. It’s used when the system needs to adapt based on feedback and outcomes.

Use cases:

- Autonomous vehicles

- Robotics

- Complex process automation
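Trial and error can be shown at its smallest scale with a multi-armed bandit, one of the simplest reinforcement-learning settings: an agent repeatedly picks an action, observes a reward, and gradually shifts toward what works. A minimal epsilon-greedy sketch, assuming three hypothetical actions (say, three variants of a process step) with hidden success rates; full RL problems add states and delayed rewards.

```python
import random


def epsilon_greedy(success_rates, rounds=5000, epsilon=0.1, seed=42):
    """Learn action values by trial and error, exploring with probability epsilon."""
    rng = random.Random(seed)
    n_actions = len(success_rates)
    counts = [0] * n_actions
    values = [0.0] * n_actions  # running average reward per action
    for _ in range(rounds):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit the best estimate
        reward = 1.0 if rng.random() < success_rates[action] else 0.0
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]  # incremental mean
    return values, counts


values, counts = epsilon_greedy([0.2, 0.5, 0.8])
print(counts)  # most rounds should end up on the last (best) action
```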

When Should (or Shouldn’t) You Use GenAI?

What Does This Mean for Your Business?

If you're only using GenAI, you might be missing out on significant potential. The real value lies in combining AI types.

Example:

- Use Analytical AI to segment your customers.

- Use GenAI to generate personalized emails for each segment.

- Use Optimization AI to time and target campaigns efficiently.

This multi-layered approach delivers better ROI, reliability, and strategic depth.
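The three layers above can be wired together as a simple pipeline. A minimal sketch in which every component is a hypothetical stand-in: a spend threshold replaces the analytical segmentation model, a template replaces the GenAI call, and picking the hour with the best historical open rate replaces a full campaign optimizer. All data is illustrative.

```python
# Hypothetical customer records: (name, annual spend in EUR)
CUSTOMERS = [("Alice", 4200), ("Bob", 150), ("Cyril", 2600)]

# Hypothetical historical open rates per send hour, by segment.
OPEN_RATES = {
    "loyal": {9: 0.31, 13: 0.22, 19: 0.35},
    "occasional": {9: 0.12, 13: 0.18, 19: 0.15},
}


def segment(spend: float) -> str:
    # Analytical layer (stand-in): a threshold instead of a trained model.
    return "loyal" if spend >= 1000 else "occasional"


def draft_email(name: str, seg: str) -> str:
    # GenAI layer (stand-in): a template instead of an LLM call.
    offer = "early access to our new collection" if seg == "loyal" else "10% off your next order"
    return f"Hi {name}, we picked {offer} just for you."


def best_send_hour(seg: str) -> int:
    # Optimization layer (stand-in): the hour with the highest open rate.
    rates = OPEN_RATES[seg]
    return max(rates, key=rates.get)


for name, spend in CUSTOMERS:
    seg = segment(spend)
    print(f"{best_send_hour(seg)}:00 [{seg}] {draft_email(name, seg)}")
```

Each stand-in can be replaced by a real model independently, which is the practical benefit of keeping the layers separate.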

Summary: GenAI ≠ All of AI

Get your first consultation free

Want to discuss the details with us? Fill out the short form below. We’ll get in touch shortly to schedule your free, no-obligation consultation.
